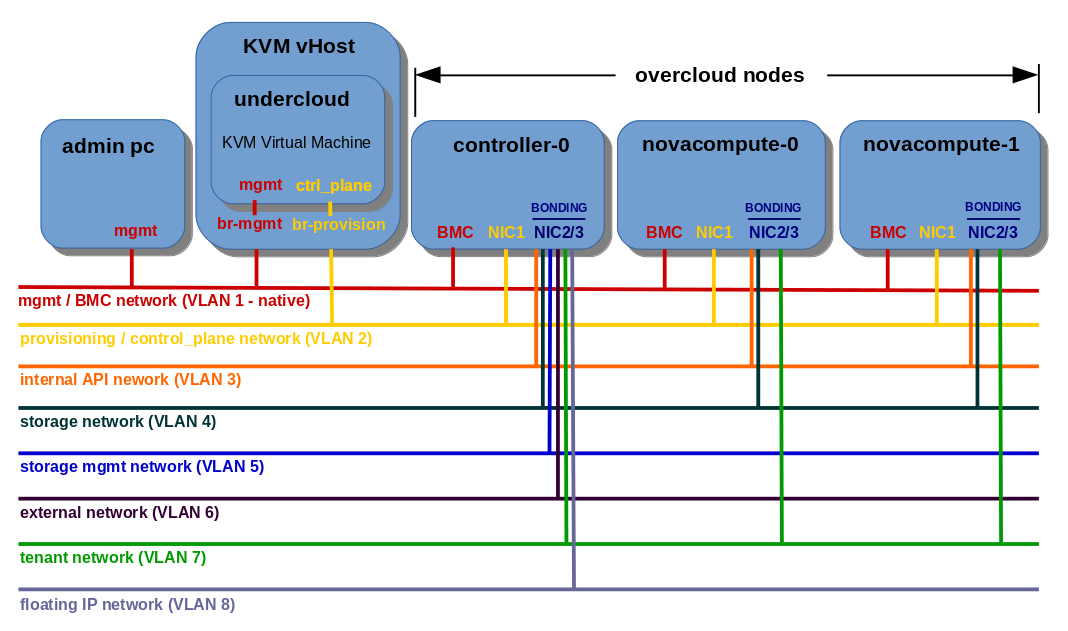

The basic OpenStack TripleO deployment uses the so-called provisioning / control plane network for all types of traffic (internal API, tenant, storage, storage management, etc.) across the whole OpenStack installation. The Undercloud node, which is used for the Overcloud deployment, becomes a kind of gateway from the Overcloud nodes to the external network. This solution has a major disadvantage: the single point of access, here the Undercloud node, can quickly turn into a single point of failure. If the Undercloud fails, we are cut off from the Overcloud nodes. Moreover, diagnosing network issues, bottlenecks, etc. becomes more difficult, since all the traffic runs through one big pipe: the provisioning / control plane network.

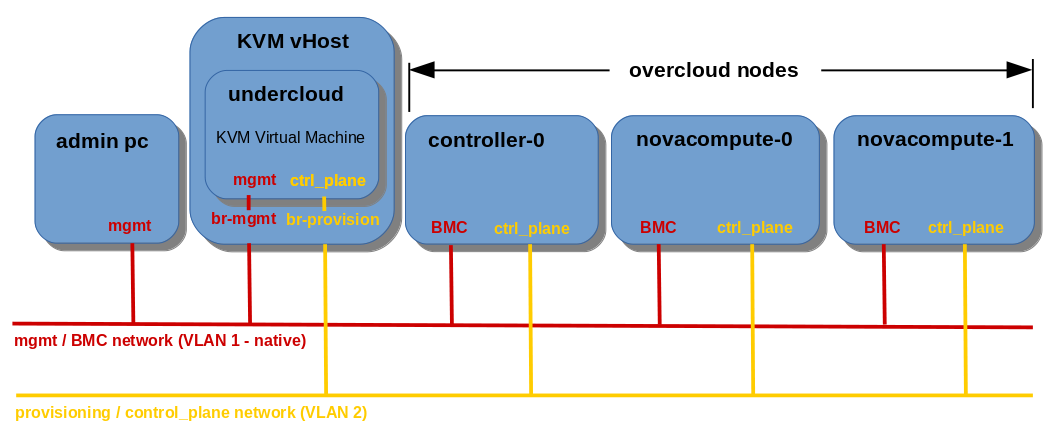

Default TripleO OpenStack network setup (no network isolation, all traffic via control plane NIC):

In the network isolation concept, the OpenStack services are configured to use isolated VLANs assigned to a separate, dedicated NIC on each Overcloud node, different from the provisioning / control plane NIC. In bonding mode, the VLANs are associated with a bonded interface consisting of two slave NICs, which provides fault tolerance if one of the slave interfaces fails.

In this article I would like to present two cases of network isolation concept in OpenStack:

- Deploying TripleO OpenStack using network isolation with VLANs and single NIC

- Deploying TripleO OpenStack using network isolation with VLANs and NIC bonding

Prerequisites

This article covers the Overcloud deployment procedure only. To deploy the Overcloud nodes, you need an installed and configured Undercloud node with the Glance images required for the Overcloud deployment already uploaded. For the Undercloud node deployment and configuration, see the following article:

OpenStack Pike TripleO Undercloud and Overcloud Deployment on 3 Bare Metal Servers.

All the servers used as Overcloud nodes should be equipped with a BMC/IPMI module, such as HP iLO, Fujitsu iRMC or Sun ILOM. The managed switches used for the deployment must support the IEEE 802.1Q standard so that they can carry tagged VLAN traffic.
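For reference, this is what 802.1Q tagging adds to each Ethernet frame: a 4-byte tag consisting of the TPID value 0x8100 followed by a 16-bit TCI that holds a 3-bit priority (PCP), a 1-bit DEI flag and a 12-bit VLAN ID. The Python sketch below packs and unpacks such a tag purely to illustrate the standard; the function names are made up for this example, and nothing in the TripleO procedure requires running it.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that marks an 802.1Q-tagged frame


def pack_vlan_tag(vlan_id: int, priority: int = 0, dei: int = 0) -> bytes:
    """Build the 4-byte 802.1Q tag (TPID + TCI) for a VLAN ID."""
    # VLAN IDs 0 and 4095 are reserved, so usable IDs are 1-4094
    if not 1 <= vlan_id <= 4094:
        raise ValueError("usable VLAN IDs are 1-4094")
    if not 0 <= priority <= 7 or dei not in (0, 1):
        raise ValueError("priority must be 0-7 and DEI must be 0 or 1")
    tci = (priority << 13) | (dei << 12) | vlan_id
    return struct.pack("!HH", TPID_8021Q, tci)  # network byte order


def unpack_vlan_tag(tag: bytes) -> tuple:
    """Return (priority, dei, vlan_id) from a 4-byte 802.1Q tag."""
    tpid, tci = struct.unpack("!HH", tag)
    if tpid != TPID_8021Q:
        raise ValueError("not an 802.1Q tag")
    return tci >> 13, (tci >> 12) & 0x1, tci & 0x0FFF


# Tag for the Internal API VLAN used later in this article (VLAN 3)
print(pack_vlan_tag(3).hex())  # 81000003
```

The switch ports carrying the isolated VLANs must accept frames carrying exactly this kind of tag, which is why 802.1Q support is a hard requirement here.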

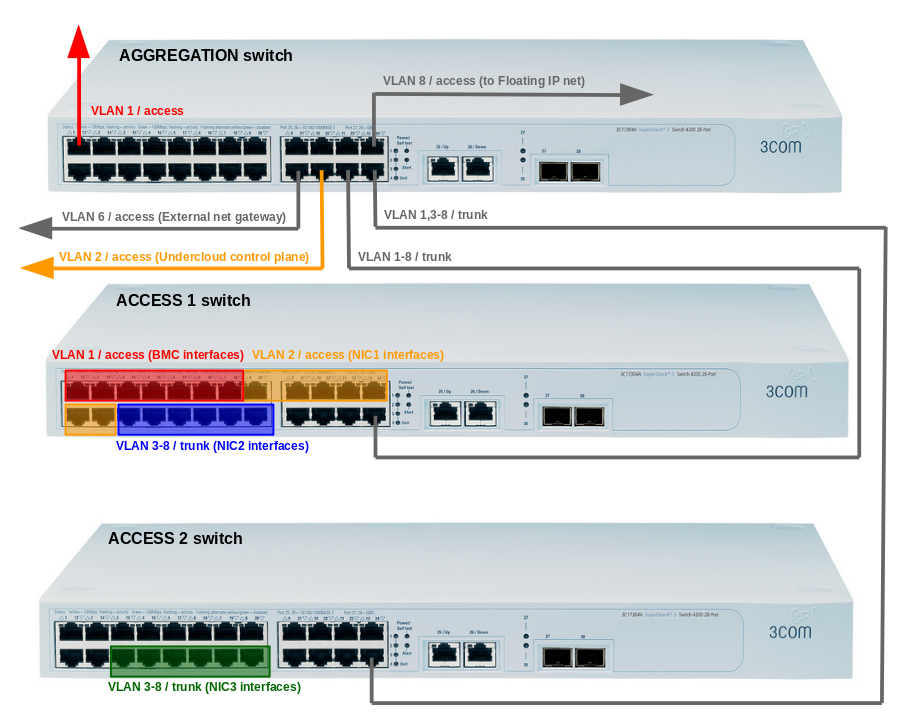

Hardware information

For this article I am using pretty old hardware: three Oracle Sun Fire X4270 M2 servers equipped with 1 Gbit/s interfaces, and three 3Com SuperStack 3 4200 managed switches, each with 24 x 10/100 Mbit ports, 2 x 10/100/1000 Mbit ports and 2 x GBIC ports. Deploying a production cloud with isolated VLANs connected to 10/100 Mbit switch ports doesn't make any sense. In a real-life scenario, that is, a medium or large production cloud, at least 10 Gbit/s FCoE cards, along with switches with 10 Gbit/s FCoE ports, should be used to handle all the isolated VLAN traffic, including, of course, the external network/floating IP VLANs. Unfortunately, 10 Gbit/s FCoE hardware is something I can't afford, so I am using my old hardware for these deployments.

Here is the list of hardware I am using for the deployment:

Controller:

HW: Oracle Sun Fire X4270 M2 (Jupiter)

Compute 0:

HW: Oracle Sun Fire X4270 M2 (Venus)

Compute 1:

HW: Oracle Sun Fire X4270 M2 (Saturn)

Aggregation switch:

HW: 3Com 4200 SuperStack 3 3C17304A

Access 1 switch:

HW: 3Com 4200 SuperStack 3 3C17304A

Access 2 switch:

HW: 3Com 4200 SuperStack 3 3C17304A

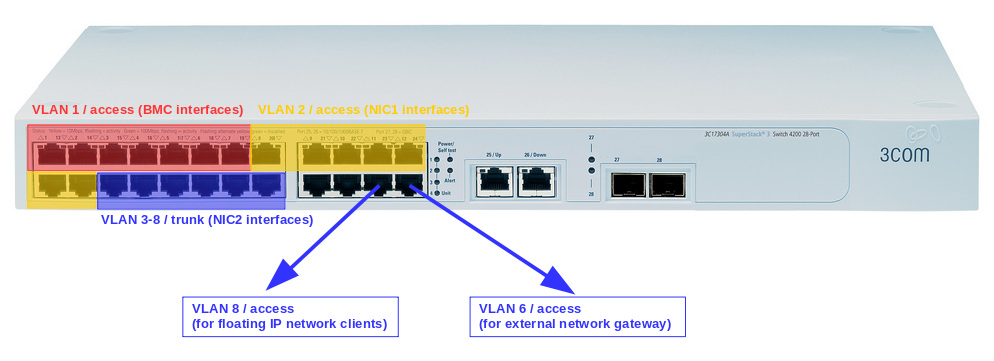

For the single NIC deployment I am using only the Access 1 switch. For the deployment with NIC bonding in active-backup mode I am using all three switches. For each type of deployment I have included a switch panel view showing the network connections.

Pre-deployment steps for both types of deployment

Before we start the deployment procedure, we need to prepare the instackenv.json file containing our inventory, register the nodes based on instackenv.json, introspect them, and finally provide the nodes for deployment.
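The register -> introspect -> provide sequence corresponds to Ironic provisioning-state transitions, which is what the Provisioning State column in the node listings below shows. The Python sketch models only the path used in this article (the Ironic verbs `manage`, `inspect` and `provide`). It deliberately leaves out transient states such as `inspecting` and `cleaning` and is a simplification for illustration, not Ironic's actual state machine.

```python
# Simplified model: (current state, verb) -> resulting state.
# Only the transitions used in this article are included.
TRANSITIONS = {
    ("enroll", "manage"): "manageable",      # done by 'openstack overcloud node import'
    ("manageable", "inspect"): "manageable",  # introspection returns the node to manageable
    ("manageable", "provide"): "available",   # 'openstack overcloud node provide'
}


def next_state(state, verb):
    """Return the state reached by applying verb to a node in state."""
    try:
        return TRANSITIONS[(state, verb)]
    except KeyError:
        raise ValueError(f"cannot {verb!r} a node in state {state!r}") from None


def run(verbs, state="enroll"):
    """Apply verbs in order and return the list of visited states."""
    history = [state]
    for verb in verbs:
        state = next_state(state, verb)
        history.append(state)
    return history


# import -> introspect -> provide, as performed below
print(run(["manage", "inspect", "provide"]))
# ['enroll', 'manageable', 'manageable', 'available']
```

The model also shows why the order matters: trying to `provide` a node that is still in `enroll` is rejected.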

The instackenv.json file I am using for the network isolation deployments looks as follows:

{
  "nodes": [
    {
      "capabilities": "profile:control,boot_option:local",
      "name": "Oracle_Sun_Fire_X4270_jupiter",
      "pm_type": "ipmi",
      "pm_user": "admin",
      "pm_password": "password",
      "pm_addr": "192.168.2.16"
    },
    {
      "capabilities": "profile:compute,boot_option:local",
      "name": "Oracle_Sun_Fire_X4270_venus",
      "pm_type": "ipmi",
      "pm_user": "admin",
      "pm_password": "password",
      "pm_addr": "192.168.2.13"
    },
    {
      "capabilities": "profile:compute,boot_option:local",
      "name": "Oracle_Sun_Fire_X4270_saturn",
      "pm_type": "ipmi",
      "pm_user": "admin",
      "pm_password": "password",
      "pm_addr": "192.168.2.15"
    }
  ]
}

Let's start by registering the nodes:

(undercloud) [stack@undercloud ~]$ openstack overcloud node import instackenv.json
Started Mistral Workflow tripleo.baremetal.v1.register_or_update. Execution ID: 6cef7fc1-7e61-4841-8897-8c3774741f45
Waiting for messages on queue '8e705515-8566-4853-9942-09ea7c9c7d39' with no timeout.
Nodes set to managed.
Successfully registered node UUID f08dfb85-44de-44ca-8f04-79f74894421a
Successfully registered node UUID 7285740b-554f-47fa-9cf9-125a2c60511f
Successfully registered node UUID 4695e59e-23c5-421a-92ef-c20e696c34f0

The nodes should now be in the manageable state:

(undercloud) [stack@undercloud ~]$ openstack baremetal node list
+--------------------------------------+-------------------------------+---------------+-------------+--------------------+-------------+
| UUID                                 | Name                          | Instance UUID | Power State | Provisioning State | Maintenance |
+--------------------------------------+-------------------------------+---------------+-------------+--------------------+-------------+
| f08dfb85-44de-44ca-8f04-79f74894421a | Oracle_Sun_Fire_X4270_jupiter | None          | power off   | manageable         | False       |
| 7285740b-554f-47fa-9cf9-125a2c60511f | Oracle_Sun_Fire_X4270_venus   | None          | power off   | manageable         | False       |
| 4695e59e-23c5-421a-92ef-c20e696c34f0 | Oracle_Sun_Fire_X4270_saturn  | None          | power off   | manageable         | False       |
+--------------------------------------+-------------------------------+---------------+-------------+--------------------+-------------+

Introspect the nodes (this usually takes up to 15 minutes):

(undercloud) [stack@undercloud ~]$ openstack overcloud node introspect --all-manageable
Waiting for introspection to finish...
Started Mistral Workflow tripleo.baremetal.v1.introspect_manageable_nodes. Execution ID: 03f16d2c-eb09-400b-acd0-88e653192384
Waiting for messages on queue 'fbee50e9-0682-4913-8954-e68bc5edc3e2' with no timeout.
Introspection of node f08dfb85-44de-44ca-8f04-79f74894421a completed. Status:SUCCESS. Errors:None
Introspection of node 7285740b-554f-47fa-9cf9-125a2c60511f completed. Status:SUCCESS. Errors:None
Introspection of node 4695e59e-23c5-421a-92ef-c20e696c34f0 completed. Status:SUCCESS. Errors:None
Successfully introspected nodes.
Nodes introspected successfully.
Introspection completed.

Provide the nodes:

(undercloud) [stack@undercloud ~]$ openstack overcloud node provide --all-manageable
Started Mistral Workflow tripleo.baremetal.v1.provide_manageable_nodes. Execution ID: 87bc7458-9fcc-47fb-8c56-8d703b1b271f
Waiting for messages on queue 'e8c97c8c-56bf-4643-9405-dbfb99226eed' with no timeout.
Successfully set nodes state to available.

The Overcloud nodes are now in the available state:

(undercloud) [stack@undercloud ~]$ openstack baremetal node list
+--------------------------------------+-------------------------------+---------------+-------------+--------------------+-------------+
| UUID                                 | Name                          | Instance UUID | Power State | Provisioning State | Maintenance |
+--------------------------------------+-------------------------------+---------------+-------------+--------------------+-------------+
| f08dfb85-44de-44ca-8f04-79f74894421a | Oracle_Sun_Fire_X4270_jupiter | None          | power off   | available          | False       |
| 7285740b-554f-47fa-9cf9-125a2c60511f | Oracle_Sun_Fire_X4270_venus   | None          | power off   | available          | False       |
| 4695e59e-23c5-421a-92ef-c20e696c34f0 | Oracle_Sun_Fire_X4270_saturn  | None          | power off   | available          | False       |
+--------------------------------------+-------------------------------+---------------+-------------+--------------------+-------------+

Finally, create a templates directory in the stack user's home directory:

(undercloud) [stack@undercloud ~]$ mkdir ~/templates

This is where we will store the YAML templates that define our network setup, as required for a deployment based on network isolation.

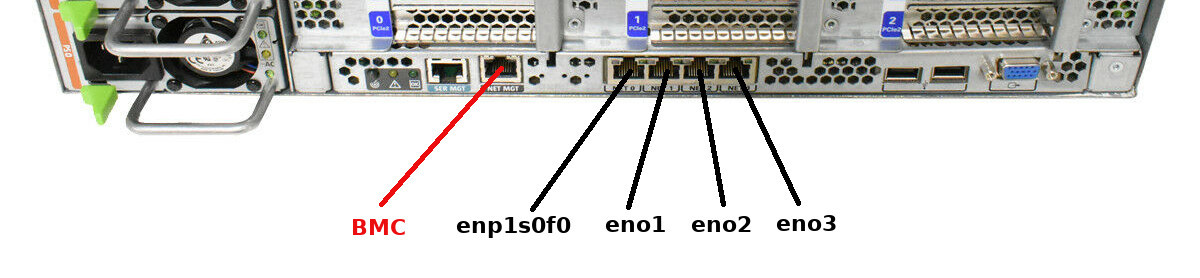

IMPORTANT: An Overcloud deployment with network isolation should be done on a uniform environment. That is, all the Overcloud servers should be of the same vendor and model, so that they have the same NIC names and the same NIC order in the operating system (CentOS 7, which the RDO TripleO Mistral workflow deploys on the Overcloud nodes by default). Otherwise, the deployment with network isolation will fail. For example, deploying on one HP ProLiant Gen6 and two Sun Fire X4270 servers will fail, since CentOS 7 names the NICs on the HP ProLiant enps0f{0,1} and those on the Sun Fire eno{1,2,3,4}. This is before even considering differences in the order in which the NICs appear in the deployed OS, or in the number of NICs integrated in or installed into each server.
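A quick way to catch this kind of mismatch before deploying is to compare the interface names each node reports, for example as collected from introspection data or from `ip -o link` on a booted system. The Python sketch below assumes you have already gathered the names per node into a dictionary. The node called `hp-node` and its interface names are hypothetical and are used only to illustrate a mismatch.

```python
def nic_name_mismatches(nics_by_node):
    """Compare each node's NIC names with the first node's.

    Returns {node: (missing_names, extra_names)} for every node that differs.
    """
    nodes = list(nics_by_node)
    if not nodes:
        return {}
    reference = set(nics_by_node[nodes[0]])
    result = {}
    for node in nodes[1:]:
        names = set(nics_by_node[node])
        if names != reference:
            result[node] = (sorted(reference - names), sorted(names - reference))
    return result


inventory = {
    "jupiter": ["enp1s0f0", "eno1", "eno2", "eno3"],
    "venus": ["enp1s0f0", "eno1", "eno2", "eno3"],
    "hp-node": ["enp3s0f0", "enp3s0f1"],  # hypothetical node from another vendor
}
print(nic_name_mismatches(inventory))
```

An empty result means all nodes report the same interface names. It does not prove that the cabling matches, only that the same `nicN` templates can apply to every node.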

Even on servers of the same make and model, OpenStack (more precisely os-net-config) maps the names of the active (connected) interfaces (enps0f0, eno1, etc.) to its abstract NIC names in alphabetical order. For example, CentOS 7 lists the network interfaces of my Oracle Sun Fire X4270 M2 servers in the following order:

[root@overcloud-controller-0 ~]# ip a
1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: enp1s0f0: mtu 1500 qdisc mq state DOWN group default qlen 1000
    link/ether 00:21:28:d7:98:0e brd ff:ff:ff:ff:ff:ff
3: eno1: mtu 1500 qdisc mq state UP group default qlen 1000
    link/ether 00:21:28:d7:98:0f brd ff:ff:ff:ff:ff:ff
    inet 192.168.24.9/24 brd 192.168.24.255 scope global dynamic eno1
       valid_lft 85398sec preferred_lft 85398sec
    inet 192.168.24.14/32 scope global eno1
       valid_lft forever preferred_lft forever
    inet6 fe80::221:28ff:fed7:980f/64 scope link
       valid_lft forever preferred_lft forever
4: eno2: mtu 1500 qdisc mq master ovs-system state UP group default qlen 1000
    link/ether 00:21:28:d7:98:10 brd ff:ff:ff:ff:ff:ff
    inet6 fe80::221:28ff:fed7:9810/64 scope link
       valid_lft forever preferred_lft forever
5: eno3: mtu 1500 qdisc mq state DOWN group default qlen 1000
    link/ether 00:21:28:d7:98:11 brd ff:ff:ff:ff:ff:ff

…but OpenStack maps them alphabetically as follows:

- NIC1 -> eno1

- NIC2 -> eno2

- NIC3 -> eno3

- NIC4 -> enp1s0f0

So the correct cabling on the server's rear panel for single NIC VLANs is as follows:

- enp1s0f0 -> n/c

- eno1 -> provisioning/control plane

- eno2 -> VLAN bridge

- eno3 -> n/c

And the correct cabling for VLANs in Bond mode should be as follows:

- enp1s0f0 -> n/c

- eno1 -> provisioning/control plane

- eno2 -> VLAN bridge bond slave 1

- eno3 -> VLAN bridge bond slave 2
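The NIC mapping described above can be reproduced with a short Python sketch. It mirrors the ordering os-net-config is understood to apply when resolving `nicN` aliases: only interfaces with a detected link count, embedded NICs (names starting with `em`, `eth` or `eno`) are numbered first, and each group is sorted alphabetically. Treat this as an approximation and check the os-net-config source shipped with your release. The link states passed in below are illustrative.

```python
# Name prefixes treated as "embedded" NICs (assumption based on os-net-config's ordering rule)
EMBEDDED_PREFIXES = ("em", "eth", "eno")


def nic_aliases(link_up):
    """Map nic1, nic2, ... to interface names, given {interface name: has link}."""
    active = [name for name, up in link_up.items() if up and name != "lo"]
    embedded = sorted(n for n in active if n.startswith(EMBEDDED_PREFIXES))
    others = sorted(n for n in active if not n.startswith(EMBEDDED_PREFIXES))
    # Embedded NICs are numbered first, then all remaining NICs, each group alphabetically
    return {f"nic{i}": name for i, name in enumerate(embedded + others, start=1)}


# All four ports with link: reproduces the NIC1..NIC4 list above
print(nic_aliases({"enp1s0f0": True, "eno1": True, "eno2": True, "eno3": True}))
# {'nic1': 'eno1', 'nic2': 'eno2', 'nic3': 'eno3', 'nic4': 'enp1s0f0'}

# Single NIC VLAN cabling: only eno1 and eno2 are connected
print(nic_aliases({"enp1s0f0": False, "eno1": True, "eno2": True, "eno3": False}))
# {'nic1': 'eno1', 'nic2': 'eno2'}
```

Under this rule, nic2 resolves to eno2 in both cabling layouts. In the bonding layout, where eno3 is also connected, nic3 resolves to eno3.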

Deploying TripleO OpenStack using network isolation with VLANs and single NIC

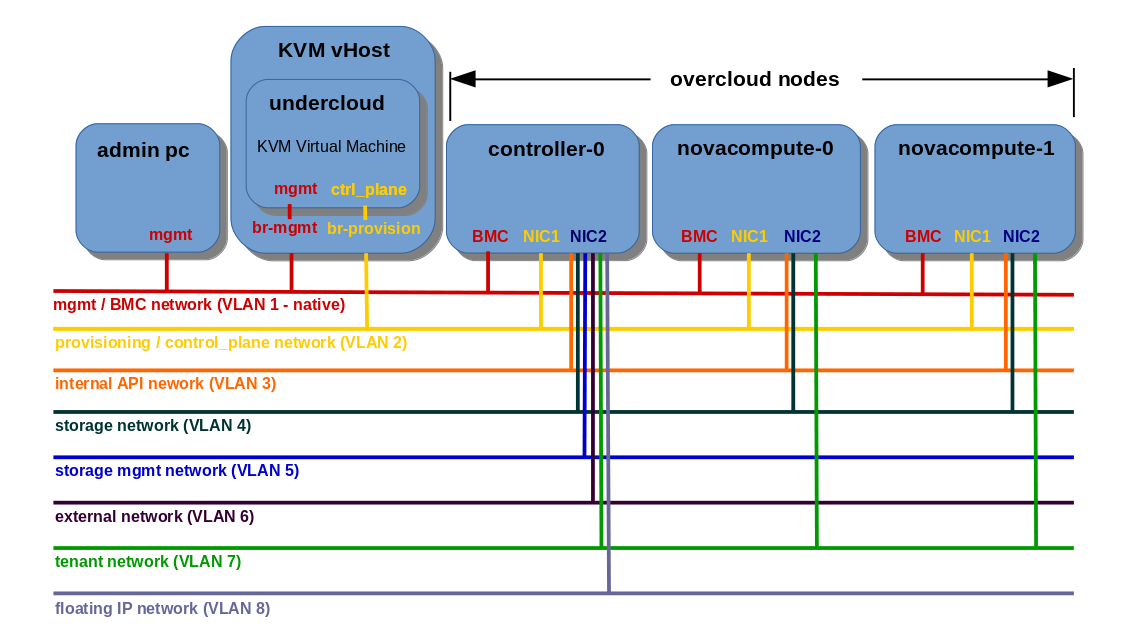

In this scenario, NIC1 is used only for the provisioning / control plane network, which is carried on VLAN 2, while NIC2 is used for all the core OpenStack network VLANs listed below. The VLAN ID of each network is given in brackets:

- Internal API (VLAN 3)

- Storage (VLAN 4)

- Storage Management (VLAN 5)

- External (VLAN 6)

- Tenant (VLAN 7)

- Floating IP (VLAN 8)
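Before writing the templates, it can help to keep the VLAN plan in one place and sanity-check it. The IDs must be unique and within 1-4094, and in this design they should not overlap the tenant VLAN range `physnet0:9:99` that is set in network-environment-vlan.yaml further on. The dictionary below restates the list above (plus the provisioning VLAN 2). The `check_vlan_plan` helper is hypothetical and written only for this illustration.

```python
VLAN_PLAN = {
    "Provisioning / control plane": 2,
    "Internal API": 3,
    "Storage": 4,
    "Storage Management": 5,
    "External": 6,
    "Tenant": 7,
    "Floating IP": 8,
}
TENANT_RANGE = range(9, 100)  # NeutronNetworkVLANRanges: 'physnet0:9:99'


def check_vlan_plan(plan, tenant_range):
    """Return a list of problems found in a {network: VLAN ID} plan."""
    problems = []
    seen = {}
    for network, vid in plan.items():
        if not 1 <= vid <= 4094:
            problems.append(f"{network}: VLAN {vid} is out of range")
        if vid in seen:
            problems.append(f"{network}: VLAN {vid} already used by {seen[vid]}")
        else:
            seen[vid] = network
        if vid in tenant_range:
            problems.append(f"{network}: VLAN {vid} overlaps the tenant range")
    return problems


print(check_vlan_plan(VLAN_PLAN, TENANT_RANGE) or "VLAN plan OK")
```

Keeping the infrastructure VLANs (2-8) below the tenant range means Neutron can never hand one of them out to a tenant network.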

Network topology presenting VLAN isolation based on NIC2:

Switch front panel view presenting network setup for single NIC VLAN isolation:

Before we launch the deployment, we need to copy and modify the network-environment.yaml template file:

(undercloud) [stack@undercloud ~]$ cp /usr/share/openstack-tripleo-heat-templates/environments/network-environment.yaml ~/templates/network-environment-vlan.yaml

Now edit the network-environment-vlan.yaml template so that it looks like this:

resource_registry:
  # Network Interface templates to use (these files must exist)
  OS::TripleO::BlockStorage::Net::SoftwareConfig: /usr/share/openstack-tripleo-heat-templates/network/config/single-nic-vlans/cinder-storage.yaml
  OS::TripleO::Compute::Net::SoftwareConfig: /usr/share/openstack-tripleo-heat-templates/network/config/single-nic-vlans/compute.yaml
  OS::TripleO::Controller::Net::SoftwareConfig: /usr/share/openstack-tripleo-heat-templates/network/config/single-nic-vlans/controller.yaml
  OS::TripleO::ObjectStorage::Net::SoftwareConfig: /usr/share/openstack-tripleo-heat-templates/network/config/single-nic-vlans/swift-storage.yaml
  OS::TripleO::CephStorage::Net::SoftwareConfig: /usr/share/openstack-tripleo-heat-templates/network/config/single-nic-vlans/ceph-storage.yaml

parameter_defaults:
  # This section is where deployment-specific configuration is done
  # CIDR subnet mask length for provisioning network
  ControlPlaneSubnetCidr: '24'
  # Gateway router for the provisioning network (or Undercloud IP)
  ControlPlaneDefaultRoute: 192.168.24.1
  EC2MetadataIp: 192.168.24.1  # Generally the IP of the Undercloud
  # Customize the IP subnets to match the local environment
  InternalApiNetCidr: 172.17.0.0/24
  StorageNetCidr: 172.18.0.0/24
  StorageMgmtNetCidr: 172.19.0.0/24
  TenantNetCidr: 172.16.0.0/24
  ExternalNetCidr: 10.0.0.0/24
  # Customize the VLAN IDs to match the local environment
  InternalApiNetworkVlanID: 3
  StorageNetworkVlanID: 4
  StorageMgmtNetworkVlanID: 5
  TenantNetworkVlanID: 7
  ExternalNetworkVlanID: 6
  # Customize the IP ranges on each network to use for static IPs and VIPs
  InternalApiAllocationPools: [{'start': '172.17.0.10', 'end': '172.17.0.200'}]
  StorageAllocationPools: [{'start': '172.18.0.10', 'end': '172.18.0.200'}]
  StorageMgmtAllocationPools: [{'start': '172.19.0.10', 'end': '172.19.0.200'}]
  TenantAllocationPools: [{'start': '172.16.0.10', 'end': '172.16.0.200'}]
  # Leave room if the external network is also used for floating IPs
  ExternalAllocationPools: [{'start': '10.0.0.10', 'end': '10.0.0.12'}]
  # Gateway router for the external network
  ExternalInterfaceDefaultRoute: 10.0.0.1
  # Uncomment if using the Management Network (see network-management.yaml)
  # ManagementNetCidr: 10.0.1.0/24
  # ManagementAllocationPools: [{'start': '10.0.1.10', 'end': '10.0.1.50'}]
  # Use either this parameter or ControlPlaneDefaultRoute in the NIC templates
  # ManagementInterfaceDefaultRoute: 10.0.1.1
  # Define the DNS servers (maximum 2) for the overcloud nodes
  #DnsServers: ["8.8.8.8","8.8.4.4"]
  # List of Neutron network types for tenant networks (will be used in order)
  NeutronNetworkType: 'vlan'
  # The tunnel type for the tenant network (vxlan or gre). Set to '' to disable tunneling.
  #NeutronTunnelTypes: 'vxlan'
  # Neutron VLAN ranges per network, for example 'datacentre:1:499,tenant:500:1000':
  NeutronNetworkVLANRanges: 'physnet0:9:99'
  # Customize bonding options, e.g. "mode=4 lacp_rate=1 updelay=1000 miimon=100"
  # for Linux bonds w/LACP, or "bond_mode=active-backup" for OVS active/backup.
  #BondInterfaceOvsOptions: "bond_mode=active-backup"

In the above listing I am using 10.0.0.1 as the router to the external network, from which we will be able to reach the Controller node directly, without having to connect via the Undercloud node. This is also why 10.0.0.1 must be pingable: otherwise the deployment will not complete successfully.
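The allocation pools above are tight: the External pool 10.0.0.10-10.0.0.12 holds only three addresses, and the Controller output later in this article shows two of them in use (10.0.0.11 and 10.0.0.12). Since a failed deployment costs a lot of time, it may be worth checking the pools beforehand. A minimal Python sketch using the standard `ipaddress` module; `check_pool` is a hypothetical helper, and the values are copied from the template above.

```python
import ipaddress


def check_pool(cidr, start, end, gateway=None):
    """Validate one allocation pool against its subnet and return its size."""
    net = ipaddress.ip_network(cidr)
    first, last = ipaddress.ip_address(start), ipaddress.ip_address(end)
    if first not in net or last not in net:
        raise ValueError(f"pool {start}-{end} is not inside {cidr}")
    if first > last:
        raise ValueError(f"pool start {start} is after pool end {end}")
    if gateway is not None:
        gw = ipaddress.ip_address(gateway)
        if gw not in net:
            raise ValueError(f"gateway {gateway} is not inside {cidr}")
        # The gateway must not be handed out to a node or VIP
        if first <= gw <= last:
            raise ValueError(f"gateway {gateway} falls inside the pool")
    return int(last) - int(first) + 1


# Values taken from network-environment-vlan.yaml above
print(check_pool("10.0.0.0/24", "10.0.0.10", "10.0.0.12", gateway="10.0.0.1"))  # 3
print(check_pool("172.17.0.0/24", "172.17.0.10", "172.17.0.200"))  # 191
```

If you plan to add more Controllers later, the External pool has to grow accordingly.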

In this environment I am going to use VLANs by default, hence:

NeutronNetworkType: 'vlan'

Pay attention to the VLAN ranges on the physnet0 network:

NeutronNetworkVLANRanges: 'physnet0:9:99'

physnet0 is the name of the physical network in OpenStack on which Flat or VLAN networks can be created (this does not apply to VXLAN networks). The range 9 to 99 defines which VLAN IDs are assigned to VLAN networks created by non-admin users. The admin user is not limited to this range and can specify a different VLAN ID explicitly when creating a VLAN network.
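The value has the form `physnet:min:max`, with multiple entries separated by commas. A physnet listed without a range can be used for admin-created provider networks only. The Python sketch below parses that string and mimics, in a deliberately simplified way, a tenant network receiving a free VLAN ID from the range. Neutron's ML2 VLAN type driver performs the real allocation and does not necessarily choose the lowest free ID as this sketch does. Both function names are hypothetical.

```python
def parse_vlan_ranges(value):
    """Parse 'physnet[:min:max],...' into {physnet: [(min, max), ...]}."""
    ranges = {}
    for entry in value.split(","):
        entry = entry.strip()
        if not entry:
            continue
        parts = entry.split(":")
        if len(parts) == 1:
            ranges.setdefault(parts[0], [])  # provider-only physnet, no tenant range
        elif len(parts) == 3:
            low, high = int(parts[1]), int(parts[2])
            if not 1 <= low <= high <= 4094:
                raise ValueError(f"invalid VLAN range in {entry!r}")
            ranges.setdefault(parts[0], []).append((low, high))
        else:
            raise ValueError(f"cannot parse {entry!r}")
    return ranges


def next_tenant_vlan(ranges, physnet, in_use):
    """Return the lowest unused VLAN ID on physnet (simplified allocation)."""
    for low, high in ranges.get(physnet, []):
        for vid in range(low, high + 1):
            if vid not in in_use:
                return vid
    raise RuntimeError(f"no free VLAN IDs left on {physnet}")


ranges = parse_vlan_ranges("physnet0:9:99")
print(ranges)                                         # {'physnet0': [(9, 99)]}
print(next_tenant_vlan(ranges, "physnet0", {9, 10}))  # 11
```

With the range 9-99, at most 91 tenant VLAN networks can exist on physnet0 at the same time.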

NOTE: whenever you create a new VLAN network with a particular VLAN ID, that VLAN must also be configured on the switch, otherwise the network will not work.

As you have probably noticed, there is a resource_registry section at the top of the network-environment-vlan.yaml file:

resource_registry:
  # Network Interface templates to use (these files must exist)
  OS::TripleO::BlockStorage::Net::SoftwareConfig: /usr/share/openstack-tripleo-heat-templates/network/config/single-nic-vlans/cinder-storage.yaml
  OS::TripleO::Compute::Net::SoftwareConfig: /usr/share/openstack-tripleo-heat-templates/network/config/single-nic-vlans/compute.yaml
  OS::TripleO::Controller::Net::SoftwareConfig: /usr/share/openstack-tripleo-heat-templates/network/config/single-nic-vlans/controller.yaml
  OS::TripleO::ObjectStorage::Net::SoftwareConfig: /usr/share/openstack-tripleo-heat-templates/network/config/single-nic-vlans/swift-storage.yaml
  OS::TripleO::CephStorage::Net::SoftwareConfig: /usr/share/openstack-tripleo-heat-templates/network/config/single-nic-vlans/ceph-storage.yaml

In my case these lines deserve special attention. The YAML files they refer to (controller.yaml, compute.yaml, etc.) are in fact Heat templates containing the network configuration for each type of Overcloud node. In the Pike release, these templates attach the VLAN bridge to NIC1 by default. Unfortunately, in my deployment NIC1 is the provisioning/control plane interface and NIC2 will be used for the isolated VLANs, so I need to edit these YAML files and replace NIC1 with NIC2:

...
members:
- type: interface
  name: nic2
  # force the MAC address of the bridge to this interface
  primary: true
- type: vlan
  vlan_id:
    get_param: ExternalNetworkVlanID
  addresses:
  - ip_netmask:
      get_param: ExternalIpSubnet
  routes:
  - default: true
    next_hop:
      get_param: ExternalInterfaceDefaultRoute
- type: vlan
  vlan_id:
    get_param: InternalApiNetworkVlanID
  addresses:
  - ip_netmask:
      get_param: InternalApiIpSubnet
- type: vlan
  vlan_id:
    get_param: StorageNetworkVlanID
  addresses:
  - ip_netmask:
      get_param: StorageIpSubnet
- type: vlan
  vlan_id:
    get_param: StorageMgmtNetworkVlanID
  addresses:
  - ip_netmask:
      get_param: StorageMgmtIpSubnet
- type: vlan
  vlan_id:
    get_param: TenantNetworkVlanID
  addresses:
  - ip_netmask:
      get_param: TenantIpSubnet
...

In this deployment I am deploying a Controller node and Compute nodes, so I need to modify controller.yaml and compute.yaml accordingly. If I were also deploying Ceph nodes, I would have to modify ceph-storage.yaml as well.
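If you prefer to script this edit, here is a minimal Python sketch. It assumes, as in the snippet above, that the bridge member is the only line of the form `name: nic1` in each template. The function name is hypothetical. Applying it to copies stored under ~/templates, and pointing resource_registry at those copies instead of editing the packaged files in /usr/share in place, is an option suggested here rather than part of the procedure above.

```python
import re

# Matches a YAML line such as "              name: nic1" and keeps everything before "nic1".
NIC1_LINE = re.compile(r"^(?P<prefix>[ \t]*name:[ \t]*)nic1[ \t]*$", re.MULTILINE)


def move_bridge_to_nic(template_text, new_nic="nic2"):
    """Return template_text with its single 'name: nic1' line pointing at new_nic."""
    new_text, count = NIC1_LINE.subn(lambda m: m.group("prefix") + new_nic, template_text)
    if count != 1:
        # Refuse to guess if the template does not have exactly one bridge member on nic1
        raise ValueError(f"expected exactly one 'name: nic1' line, found {count}")
    return new_text


snippet = """members:
- type: interface
  name: nic1
  # force the MAC address of the bridge to this interface
  primary: true
"""
print(move_bridge_to_nic(snippet))
```

Raising an error instead of silently replacing several lines keeps the script from changing templates whose layout differs from the Pike single-nic-vlans files.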

The following command deploys OpenStack on one Controller node and two Compute nodes, including the network isolation setup:

(undercloud) [stack@undercloud ~]$ openstack overcloud deploy --control-flavor control --control-scale 1 \
  --compute-flavor compute --compute-scale 2 --templates \
  -e /usr/share/openstack-tripleo-heat-templates/environments/network-isolation.yaml \
  -e ~/templates/network-environment-vlan.yaml \
  --ntp-server 192.168.24.1

Note: as with any other OpenStack deployment, we need to specify an NTP server to keep the OpenStack nodes synchronised. In this example I am using a local NTP server running on the Undercloud node. In a production environment, however, the external network from which the Controller is accessible is usually routed to the outside world, so you can also use any reachable public NTP server. It's up to you.

The deployment on my old Oracle Sun Fire X4270 M2 servers usually takes about 1.5 hours. During this time each Overcloud node should reboot once, when the newly deployed OS boots from the local disk. The Mistral workflow then continues with the remaining deployment steps on the nodes.

Final message of a successful deployment, including the Horizon dashboard IP address (10.0.0.12):

...
2019-01-26 23:13:07Z [overcloud.AllNodesDeploySteps]: CREATE_COMPLETE Stack CREATE completed successfully
2019-01-26 23:13:08Z [overcloud.AllNodesDeploySteps]: CREATE_COMPLETE state changed
2019-01-26 23:13:08Z [overcloud]: CREATE_COMPLETE Stack CREATE completed successfully
Stack overcloud CREATE_COMPLETE
Host 10.0.0.12 not found in /home/stack/.ssh/known_hosts
Overcloud Endpoint: http://10.0.0.12:5000/v2.0
Overcloud Deployed
(undercloud) [stack@undercloud ~]$

Post-deployment brief check

After a successful deployment, TripleO creates the overcloudrc file containing the Keystone admin credentials. Let's check the generated admin password:

(undercloud) [stack@undercloud ~]$ cat ~/overcloudrc | grep OS_PASSWORD
export OS_PASSWORD=etgVyE4DCFXc7WgyGMmVHKfGg

Now try to connect to Horizon using a web browser on a client PC connected to the external network router:

http://10.0.0.12/dashboard

The OpenStack Horizon splash screen should appear:

Log in using our admin credentials: admin / etgVyE4DCFXc7WgyGMmVHKfGg

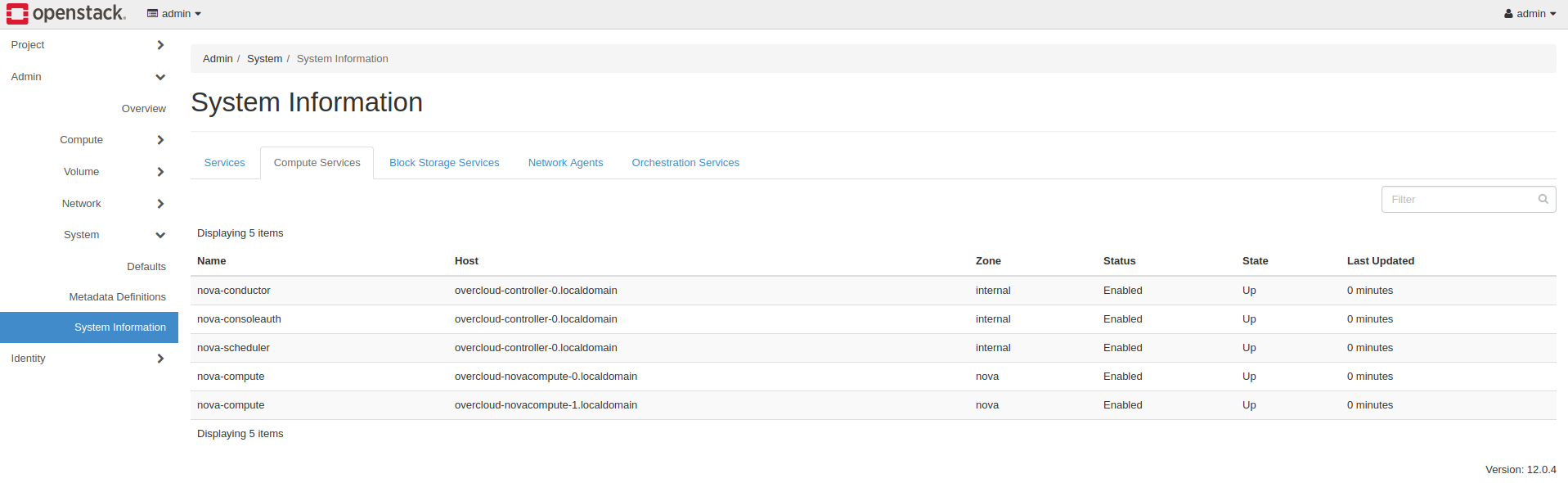

Navigate to Admin -> System -> System Information and check the tabs for the different services to see whether they are working.

Example screenshot presenting Compute Services tab in OpenStack Horizon dashboard:

Now log out and go back to the Undercloud node.

Verify the overcloud stack status:

(undercloud) [stack@undercloud ~]$ openstack stack list
+--------------------------------------+------------+----------------------------------+-----------------+----------------------+--------------+
| ID                                   | Stack Name | Project                          | Stack Status    | Creation Time        | Updated Time |
+--------------------------------------+------------+----------------------------------+-----------------+----------------------+--------------+
| 0632d532-9a84-4b90-bb64-2c5daf2fdfbd | overcloud  | c52fd78c2a89437b8fbb1a64c7238448 | CREATE_COMPLETE | 2019-01-26T21:48:32Z | None         |
+--------------------------------------+------------+----------------------------------+-----------------+----------------------+--------------+

List the Overcloud nodes with their IP addresses on the control plane network:

(undercloud) [stack@undercloud ~]$ nova list
+--------------------------------------+-------------------------+--------+------------+-------------+------------------------+
| ID                                   | Name                    | Status | Task State | Power State | Networks               |
+--------------------------------------+-------------------------+--------+------------+-------------+------------------------+
| ae7d856a-4996-49e2-8d7e-be0e02693a08 | overcloud-controller-0  | ACTIVE | -          | Running     | ctlplane=192.168.24.9  |
| cfad83b9-5b11-4a97-ae56-cd7003d2f7d5 | overcloud-novacompute-0 | ACTIVE | -          | Running     | ctlplane=192.168.24.12 |
| 5ceee8b5-38b6-4fa3-bda3-6d9bdc6a3dd1 | overcloud-novacompute-1 | ACTIVE | -          | Running     | ctlplane=192.168.24.6  |
+--------------------------------------+-------------------------+--------+------------+-------------+------------------------+

Log in to the Overcloud nodes from the Undercloud node as the heat-admin user (no password is required) and verify the network configuration.
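If you want to script the per-node checks that follow, the control plane addresses can be extracted from the `nova list` table. The Python sketch below parses the table text exactly as printed above. Alternatively, `openstack server list -f json` returns structured output and avoids text parsing altogether. The `ctlplane_ips` helper is hypothetical.

```python
def ctlplane_ips(table_text):
    """Return {server name: first ctlplane IP} parsed from 'nova list' table output."""
    result = {}
    for line in table_text.splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # skip the +----+ border lines and anything else
        cells = [cell.strip() for cell in line.strip("|").split("|")]
        if cells[0] == "ID":
            continue  # header row
        name, networks = cells[1], cells[-1]
        # The Networks column looks like 'ctlplane=192.168.24.9'; several networks are ';'-separated
        for entry in networks.split(";"):
            net, _, ips = entry.strip().partition("=")
            if net == "ctlplane":
                result[name] = ips.split(",")[0].strip()
    return result


table = """
| ID | Name | Status | Task State | Power State | Networks |
| ae7d856a-4996-49e2-8d7e-be0e02693a08 | overcloud-controller-0 | ACTIVE | - | Running | ctlplane=192.168.24.9 |
| cfad83b9-5b11-4a97-ae56-cd7003d2f7d5 | overcloud-novacompute-0 | ACTIVE | - | Running | ctlplane=192.168.24.12 |
"""
for name, ip in ctlplane_ips(table).items():
    print(f"ssh heat-admin@{ip}  # {name}")
```

The printed lines are the same ssh commands used below to log in to each node.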

Verify Controller node:

(undercloud) [stack@undercloud ~]$ ssh heat-admin@192.168.24.9
[heat-admin@overcloud-controller-0 ~]$ sudo su -
[root@overcloud-controller-0 ~]# ip a
1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: enp1s0f0: mtu 1500 qdisc mq state DOWN group default qlen 1000
    link/ether 00:21:28:d7:98:0e brd ff:ff:ff:ff:ff:ff
3: eno1: mtu 1500 qdisc mq state UP group default qlen 1000
    link/ether 00:21:28:d7:98:0f brd ff:ff:ff:ff:ff:ff
    inet 192.168.24.9/24 brd 192.168.24.255 scope global dynamic eno1
       valid_lft 82663sec preferred_lft 82663sec
    inet 192.168.24.14/32 scope global eno1
       valid_lft forever preferred_lft forever
    inet6 fe80::221:28ff:fed7:980f/64 scope link
       valid_lft forever preferred_lft forever
4: eno2: mtu 1500 qdisc mq master ovs-system state UP group default qlen 1000
    link/ether 00:21:28:d7:98:10 brd ff:ff:ff:ff:ff:ff
    inet6 fe80::221:28ff:fed7:9810/64 scope link
       valid_lft forever preferred_lft forever
5: eno3: mtu 1500 qdisc mq state DOWN group default qlen 1000
    link/ether 00:21:28:d7:98:11 brd ff:ff:ff:ff:ff:ff
6: ovs-system: mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether d2:31:32:1d:e0:86 brd ff:ff:ff:ff:ff:ff
7: br-ex: mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000
    link/ether 00:21:28:d7:98:10 brd ff:ff:ff:ff:ff:ff
    inet 192.168.24.9/24 brd 192.168.24.255 scope global br-ex
       valid_lft forever preferred_lft forever
    inet6 fe80::221:28ff:fed7:9810/64 scope link
       valid_lft forever preferred_lft forever
8: vlan3: mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000
    link/ether 02:31:68:b5:00:67 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.19/24 brd 172.17.0.255 scope global vlan3
       valid_lft forever preferred_lft forever
    inet 172.17.0.17/32 scope global vlan3
       valid_lft forever preferred_lft forever
    inet 172.17.0.12/32 scope global vlan3
       valid_lft forever preferred_lft forever
    inet6 fe80::31:68ff:feb5:67/64 scope link
       valid_lft forever preferred_lft forever
9: vlan4: mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000
    link/ether d2:16:92:6a:d2:e2 brd ff:ff:ff:ff:ff:ff
    inet 172.18.0.12/24 brd 172.18.0.255 scope global vlan4
       valid_lft forever preferred_lft forever
    inet 172.18.0.15/32 scope global vlan4
       valid_lft forever preferred_lft forever
    inet6 fe80::d016:92ff:fe6a:d2e2/64 scope link
       valid_lft forever preferred_lft forever
10: vlan5: mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000
    link/ether f2:d2:51:14:83:3b brd ff:ff:ff:ff:ff:ff
    inet 172.19.0.19/24 brd 172.19.0.255 scope global vlan5
       valid_lft forever preferred_lft forever
    inet 172.19.0.12/32 scope global vlan5
       valid_lft forever preferred_lft forever
    inet6 fe80::f0d2:51ff:fe14:833b/64 scope link
       valid_lft forever preferred_lft forever
11: vlan6: mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000
    link/ether 8a:d7:67:ab:0c:07 brd ff:ff:ff:ff:ff:ff
    inet 10.0.0.11/24 brd 10.0.0.255 scope global vlan6
       valid_lft forever preferred_lft forever
    inet 10.0.0.12/32 scope global vlan6
       valid_lft forever preferred_lft forever
    inet6 fe80::88d7:67ff:feab:c07/64 scope link
       valid_lft forever preferred_lft forever
12: vlan7: mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000
    link/ether 26:a0:3c:97:95:89 brd ff:ff:ff:ff:ff:ff
    inet 172.16.0.13/24 brd 172.16.0.255 scope global vlan7
       valid_lft forever preferred_lft forever
    inet6 fe80::24a0:3cff:fe97:9589/64 scope link
       valid_lft forever preferred_lft forever
13: br-int: mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether 52:a7:2d:c4:22:40 brd ff:ff:ff:ff:ff:ff
14: br-tun: mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether 16:9a:35:97:ca:40 brd ff:ff:ff:ff:ff:ff
15: vxlan_sys_4789: mtu 65520 qdisc noqueue master ovs-system state UNKNOWN group default qlen 1000
    link/ether 16:d3:77:b6:85:ef brd ff:ff:ff:ff:ff:ff
    inet6 fe80::14d3:77ff:feb6:85ef/64 scope link
       valid_lft forever preferred_lft forever

Note the VLAN interface names vlan3 to vlan7 and their CIDR notations, which correspond to the VLAN IDs and subnets defined in the network-environment-vlan.yaml file.
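The correspondence between VLAN interfaces and subnets can also be checked mechanically. The Python sketch below parses `ip a` text like the output above and reports any address on a `vlanN` interface that falls outside the subnet expected for that VLAN. The `EXPECTED` mapping restates the values from network-environment-vlan.yaml, and the helper names are hypothetical.

```python
import ipaddress
import re

EXPECTED = {  # VLAN interface -> CIDR, from network-environment-vlan.yaml
    "vlan3": "172.17.0.0/24",  # Internal API
    "vlan4": "172.18.0.0/24",  # Storage
    "vlan5": "172.19.0.0/24",  # Storage Management
    "vlan6": "10.0.0.0/24",    # External
    "vlan7": "172.16.0.0/24",  # Tenant
}
IFACE_RE = re.compile(r"^\d+:\s+([^:@\s]+)")                  # e.g. "8: vlan3: ..."
INET_RE = re.compile(r"^\s*inet\s+(\d+\.\d+\.\d+\.\d+/\d+)")  # IPv4 only, skips inet6


def ipv4_by_interface(ip_a_text):
    """Return {interface: [IPv4 address/prefix, ...]} from 'ip a' output."""
    addrs, current = {}, None
    for line in ip_a_text.splitlines():
        m = IFACE_RE.match(line)
        if m:
            current = m.group(1)
            addrs.setdefault(current, [])
            continue
        m = INET_RE.match(line)
        if m and current:
            addrs[current].append(m.group(1))
    return addrs


def misplaced_addresses(ip_a_text, expected=EXPECTED):
    """List VLAN interface addresses that are outside the expected subnet."""
    problems = []
    for iface, addrs in ipv4_by_interface(ip_a_text).items():
        if iface not in expected:
            continue
        net = ipaddress.ip_network(expected[iface])
        for cidr in addrs:
            if ipaddress.ip_interface(cidr).ip not in net:
                problems.append(f"{iface}: {cidr} not in {net}")
    return problems


sample = """8: vlan3: mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000
    inet 172.17.0.19/24 brd 172.17.0.255 scope global vlan3
    inet 172.17.0.12/32 scope global vlan3
11: vlan6: mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000
    inet 10.0.0.11/24 brd 10.0.0.255 scope global vlan6
"""
print(misplaced_addresses(sample) or "all VLAN addresses match their subnets")
```

The /32 entries (for example 172.17.0.12 and 10.0.0.12) are additional addresses, such as the VIPs, and still have to fall inside the VLAN's subnet, which is why they are checked too.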

Bridge br-ex, to which all the core OpenStack VLANs 3-7 are attached, has been created on the eno2 interface (eno2 is attached to br-ex as a port):

[root@overcloud-controller-0 ~]# ovs-vsctl show
34ee2569-51ec-4e8b-8bca-8a180e4152c3
    Manager "ptcp:6640:127.0.0.1"
        is_connected: true
    Bridge br-tun
        Controller "tcp:127.0.0.1:6633"
            is_connected: true
        fail_mode: secure
        Port "vxlan-ac100013"
            Interface "vxlan-ac100013"
                type: vxlan
                options: {df_default="true", in_key=flow, local_ip="172.16.0.13", out_key=flow, remote_ip="172.16.0.19"}
        Port br-tun
            Interface br-tun
                type: internal
        Port patch-int
            Interface patch-int
                type: patch
                options: {peer=patch-tun}
        Port "vxlan-ac10000a"
            Interface "vxlan-ac10000a"
                type: vxlan
                options: {df_default="true", in_key=flow, local_ip="172.16.0.13", out_key=flow, remote_ip="172.16.0.10"}
    Bridge br-int
        Controller "tcp:127.0.0.1:6633"
            is_connected: true
        fail_mode: secure
        Port patch-tun
            Interface patch-tun
                type: patch
                options: {peer=patch-int}
        Port br-int
            Interface br-int
                type: internal
        Port int-br-ex
            Interface int-br-ex
                type: patch
                options: {peer=phy-br-ex}
    Bridge br-ex
        Controller "tcp:127.0.0.1:6633"
            is_connected: true
        fail_mode: secure
        Port "vlan5"
            tag: 5
            Interface "vlan5"
                type: internal
        Port phy-br-ex
            Interface phy-br-ex
                type: patch
                options: {peer=int-br-ex}
        Port "vlan7"
            tag: 7
            Interface "vlan7"
                type: internal
        Port "eno2"
            Interface "eno2"
        Port "vlan3"
            tag: 3
            Interface "vlan3"
                type: internal
        Port "vlan6"
            tag: 6
            Interface "vlan6"
                type: internal
        Port br-ex
            Interface br-ex
                type: internal
        Port "vlan4"
            tag: 4
            Interface "vlan4"
                type: internal
    ovs_version: "2.7.3"

Verify the Compute 0 node:

(undercloud) [stack@undercloud ~]$ ssh heat-admin@192.168.24.12
[heat-admin@overcloud-novacompute-0 ~]$ sudo su -
[root@overcloud-novacompute-0 ~]# ip a
1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: enp1s0f0: mtu 1500 qdisc mq state DOWN group default qlen 1000
    link/ether 00:21:28:d7:99:64 brd ff:ff:ff:ff:ff:ff
3: eno1: mtu 1500 qdisc mq state UP group default qlen 1000
    link/ether 00:21:28:d7:99:65 brd ff:ff:ff:ff:ff:ff
    inet 192.168.24.12/24 brd 192.168.24.255 scope global dynamic eno1
       valid_lft 82236sec preferred_lft 82236sec
    inet6 fe80::221:28ff:fed7:9965/64 scope link
       valid_lft forever preferred_lft forever
4: eno2: mtu 1500 qdisc mq master ovs-system state UP group default qlen 1000
    link/ether 00:21:28:d7:99:66 brd ff:ff:ff:ff:ff:ff
    inet6 fe80::221:28ff:fed7:9966/64 scope link
       valid_lft forever preferred_lft forever
5: eno3: mtu 1500 qdisc mq state DOWN group default qlen 1000
    link/ether 00:21:28:d7:99:67 brd ff:ff:ff:ff:ff:ff
6: ovs-system: mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether de:ae:85:a8:35:0b brd ff:ff:ff:ff:ff:ff
7: br-ex: mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000
    link/ether 00:21:28:d7:99:66 brd ff:ff:ff:ff:ff:ff
    inet 192.168.24.12/24 brd 192.168.24.255 scope global br-ex
       valid_lft forever preferred_lft forever
    inet6 fe80::221:28ff:fed7:9966/64 scope link
       valid_lft forever preferred_lft forever
8: vlan3: mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000
    link/ether f6:00:66:51:2c:12 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.11/24 brd 172.17.0.255 scope global vlan3
       valid_lft forever preferred_lft forever
    inet6 fe80::f400:66ff:fe51:2c12/64 scope link
       valid_lft forever preferred_lft forever
9: vlan4: mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000
    link/ether 4e:c0:9c:e0:18:f7 brd ff:ff:ff:ff:ff:ff
    inet 172.18.0.14/24 brd 172.18.0.255 scope global vlan4
       valid_lft forever preferred_lft forever
    inet6 fe80::4cc0:9cff:fee0:18f7/64 scope link
       valid_lft forever preferred_lft forever
10: vlan7: mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000
    link/ether 0e:d4:63:e3:77:48 brd ff:ff:ff:ff:ff:ff
    inet 172.16.0.10/24 brd 172.16.0.255 scope global vlan7
       valid_lft forever preferred_lft forever
    inet6 fe80::cd4:63ff:fee3:7748/64 scope link
       valid_lft forever preferred_lft forever
11: br-int: mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether 2a:cd:e8:74:f9:45 brd ff:ff:ff:ff:ff:ff
12: br-tun: mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether 46:48:ed:22:c8:43 brd ff:ff:ff:ff:ff:ff
13: vxlan_sys_4789: mtu 65520 qdisc noqueue master ovs-system state UNKNOWN group default qlen 1000
    link/ether b2:b3:38:b9:55:88 brd ff:ff:ff:ff:ff:ff
    inet6 fe80::b0b3:38ff:feb9:5588/64 scope link
       valid_lft forever preferred_lft forever

The Compute node has only three tagged VLAN interfaces, vlan{3,4,7}, because it uses only VLANs 3, 4 and 7. The interfaces for VLAN 5 (Storage Management) and VLAN 6 (External Network) were not created here, because only the Controller node uses them.
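This per-role difference can be expressed as a simple set comparison. In the Python sketch below, `EXPECTED_VLANS` restates what this article observed for the Controller and Compute roles with the Pike single-nic-vlans templates. Other roles (Ceph storage, for example) would need their own entries, which are not covered here, and the helper names are hypothetical.

```python
EXPECTED_VLANS = {  # observed in this deployment, per role
    "controller": {3, 4, 5, 6, 7},
    "compute": {3, 4, 7},
}


def vlan_ids_from_names(interface_names):
    """Extract N from interface names of the form 'vlanN'."""
    ids = set()
    for name in interface_names:
        if name.startswith("vlan") and name[4:].isdigit():
            ids.add(int(name[4:]))
    return ids


def compare_role(role, interface_names):
    """Report VLAN interfaces missing from, or unexpected for, a node of the given role."""
    found = vlan_ids_from_names(interface_names)
    expected = EXPECTED_VLANS[role]
    return {"missing": sorted(expected - found), "unexpected": sorted(found - expected)}


# Interface names from the Compute 0 'ip a' output above
compute0 = ["lo", "enp1s0f0", "eno1", "eno2", "eno3", "ovs-system", "br-ex",
            "vlan3", "vlan4", "vlan7", "br-int", "br-tun", "vxlan_sys_4789"]
print(compare_role("compute", compute0))  # {'missing': [], 'unexpected': []}
```

Running the same list against the "controller" entry would report VLANs 5 and 6 as missing, which is the expected difference between the two roles.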

The interface mapping for bridge br-ex on the Compute node is similar to that on the Controller node:

[root@overcloud-novacompute-0 ~]# ovs-vsctl show

31bfdcb3-875d-48b6-a391-83faa0766ae1

Manager "ptcp:6640:127.0.0.1"

is_connected: true

Bridge br-tun

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port br-tun

Interface br-tun

type: internal

Port "vxlan-ac10000d"

Interface "vxlan-ac10000d"

type: vxlan

options: {df_default="true", in_key=flow, local_ip="172.16.0.10", out_key=flow, remote_ip="172.16.0.13"}

Port patch-int

Interface patch-int

type: patch

options: {peer=patch-tun}

Port "vxlan-ac100013"

Interface "vxlan-ac100013"

type: vxlan

options: {df_default="true", in_key=flow, local_ip="172.16.0.10", out_key=flow, remote_ip="172.16.0.19"}

Bridge br-ex

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port "vlan7"

tag: 7

Interface "vlan7"

type: internal

Port phy-br-ex

Interface phy-br-ex

type: patch

options: {peer=int-br-ex}

Port br-ex

Interface br-ex

type: internal

Port "vlan3"

tag: 3

Interface "vlan3"

type: internal

Port "vlan4"

tag: 4

Interface "vlan4"

type: internal

Port "eno2"

Interface "eno2"

Bridge br-int

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port int-br-ex

Interface int-br-ex

type: patch

options: {peer=phy-br-ex}

Port br-int

Interface br-int

type: internal

Port patch-tun

Interface patch-tun

type: patch

options: {peer=patch-int}

ovs_version: "2.7.3"

The network interface configuration on the Compute 1 node is analogous to that of Compute 0, so there is no need to present it here.
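The VXLAN port names in br-tun are not random. In the output above, the suffix of each port name matches the port's remote_ip option written in hexadecimal: ac10000d is 172.16.0.13 and ac100013 is 172.16.0.19. A small bash helper can decode them, which is handy for confirming that the tunnels run over the Tenant network (VLAN 7, 172.16.0.0/24). The helper name tunnel_port_to_ip is made up for this example, and it assumes an 8-hex-digit IPv4 suffix.

```bash
# Minimal sketch: decode a Neutron OVS tunnel port name such as "vxlan-ac10000d"
# into the remote IPv4 address it refers to. Assumes the suffix is the 8-digit
# hex form of the address, as the remote_ip options in the output above suggest.
tunnel_port_to_ip() {
  local hex="${1##*-}"                       # drop the "vxlan-" / "gre-" prefix
  if [[ ! "$hex" =~ ^[0-9a-fA-F]{8}$ ]]; then
    echo "not an IPv4 tunnel port name: $1" >&2
    return 1
  fi
  # Each pair of hex digits is one octet; bash printf accepts 0x-prefixed numbers for %d.
  printf '%d.%d.%d.%d\n' "0x${hex:0:2}" "0x${hex:2:2}" "0x${hex:4:2}" "0x${hex:6:2}"
}

# Usage on an overcloud node:
#   for port in $(ovs-vsctl list-ports br-tun | grep '^vxlan-'); do
#     echo "$port -> $(tunnel_port_to_ip "$port")"
#   done
```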

Because we don’t have a Floating IP network yet, we should create one. I reserved VLAN 8 specifically for this purpose. The Floating IP network will let tenant users access their Instances from outside the cloud.
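Before creating the network, it can help to sanity-check the planned addressing. Below this article uses subnet 10.0.1.0/24, gateway 10.0.1.1 and pool 10.0.1.9 to 10.0.1.99. The check confirms that the pool lies inside the subnet and does not contain the gateway. This is a minimal, IPv4-only bash sketch; the helper names are hypothetical.

```bash
# Minimal sketch: sanity-check a subnet's allocation pool before handing it to
# `openstack subnet create`. Helper names are hypothetical; IPv4 only.

# Convert dotted-quad IPv4 to an integer (10# forces decimal octets).
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

# Succeed if IP ($1) lies inside CIDR ($2, e.g. 10.0.1.0/24).
in_cidr() {
  local net="${2%/*}" bits="${2#*/}" mask
  mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$1") & mask) == ($(ip_to_int "$net") & mask) ))
}

# check_pool CIDR GATEWAY POOL_START POOL_END
check_pool() {
  local cidr="$1" gw="$2" start="$3" end="$4" s e g
  in_cidr "$start" "$cidr" || { echo "pool start $start is outside $cidr"; return 1; }
  in_cidr "$end" "$cidr"   || { echo "pool end $end is outside $cidr"; return 1; }
  in_cidr "$gw" "$cidr"    || { echo "gateway $gw is outside $cidr"; return 1; }
  s=$(ip_to_int "$start"); e=$(ip_to_int "$end"); g=$(ip_to_int "$gw")
  (( s <= e ))         || { echo "pool start is after pool end"; return 1; }
  (( g < s || g > e )) || { echo "gateway $gw lies inside the pool"; return 1; }
  echo "ok: $(( e - s + 1 )) addresses in the pool"
}

# Values from the Floating IP subnet used in this article:
#   check_pool 10.0.1.0/24 10.0.1.1 10.0.1.9 10.0.1.99
```

Keep in mind that router gateway ports also take addresses from this pool, so not every address ends up as a Floating IP.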

First of all, source the overcloud credentials file:

(undercloud) [stack@undercloud ~]$ source overcloudrc

Note: the overcloudrc file points to the Controller’s IP address on the external network, which is 10.0.0.12. This means you need a route from the Undercloud machine to the external network (VLAN 6) in order to run commands against the overcloud. If you don’t have one, copy the overcloudrc file to the Controller node, log in to the Controller as heat-admin, source the file and run the commands below from the Controller.
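A quick way to see which endpoint overcloudrc will talk to, and whether the Undercloud can reach it, is sketched below. The sketch assumes the rc file exports OS_AUTH_URL, which points to the endpoint reported at the end of the deployment. The helper name auth_url_host is hypothetical, and the usage lines are left as comments so the snippet is safe to source.

```bash
# Minimal sketch: print the host part of OS_AUTH_URL from a Keystone rc file
# such as ~/overcloudrc (assumes an "export OS_AUTH_URL=..." line is present).
auth_url_host() {
  sed -n 's|^export OS_AUTH_URL=[^/]*//\([^:/]*\).*|\1|p' "$1" | head -n 1
}

# Usage on the Undercloud:
#   host=$(auth_url_host ~/overcloudrc)            # 10.0.0.12 in this article
#   ip route get "$host"                           # which interface/gateway the kernel would use
#   curl -s -o /dev/null -w '%{http_code}\n' "http://$host:5000/"   # does Keystone answer?
```

ip route get only shows the route the kernel would pick, for example via a default gateway. The curl call is what confirms that Keystone actually answers from this machine.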

Create the Floating IP network based on VLAN 8. The network should have the external flag set and should be shared between tenants:

(overcloud) [stack@undercloud ~]$ openstack network create --external --share --provider-network-type vlan --provider-physical-network physnet0 --provider-segment 8 --enable-port-security --project admin floating-ip-net

+---------------------------+--------------------------------------+

| Field | Value |

+---------------------------+--------------------------------------+

| admin_state_up | UP |

| availability_zone_hints | |

| availability_zones | |

| created_at | 2019-01-26T23:38:32Z |

| description | |

| dns_domain | None |

| id | cc4d9369-1e73-416f-8878-d8dc330673fc |

| ipv4_address_scope | None |

| ipv6_address_scope | None |

| is_default | False |

| is_vlan_transparent | None |

| mtu | 1500 |

| name | floating-ip-net |

| port_security_enabled | True |

| project_id | 8fb2422967bd40abbaaebcf862a05c73 |

| provider:network_type | vlan |

| provider:physical_network | physnet0 |

| provider:segmentation_id | 8 |

| qos_policy_id | None |

| revision_number | 4 |

| router:external | External |

| segments | None |

| shared | True |

| status | ACTIVE |

| subnets | |

| tags | |

| updated_at | 2019-01-26T23:38:32Z |

+---------------------------+--------------------------------------+

Now create a subnet for the Floating IP network, applying network parameters that match your network architecture:

(overcloud) [stack@undercloud ~]$ openstack subnet create --subnet-range 10.0.1.0/24 --gateway 10.0.1.1 --network floating-ip-net --allocation-pool start=10.0.1.9,end=10.0.1.99 floating-ip-subnet

+-------------------------+--------------------------------------+

| Field | Value |

+-------------------------+--------------------------------------+

| allocation_pools | 10.0.1.9-10.0.1.99 |

| cidr | 10.0.1.0/24 |

| created_at | 2019-01-26T23:39:12Z |

| description | |

| dns_nameservers | |

| enable_dhcp | True |

| gateway_ip | 10.0.1.1 |

| host_routes | |

| id | 37da55d2-8372-4bd5-97e3-d5a6bf2fd6f0 |

| ip_version | 4 |

| ipv6_address_mode | None |

| ipv6_ra_mode | None |

| name | floating-ip-subnet |

| network_id | cc4d9369-1e73-416f-8878-d8dc330673fc |

| project_id | 8fb2422967bd40abbaaebcf862a05c73 |

| revision_number | 0 |

| segment_id | None |

| service_types | |

| subnetpool_id | None |

| tags | |

| updated_at | 2019-01-26T23:39:12Z |

| use_default_subnet_pool | None |

+-------------------------+--------------------------------------+

Removing the stack

If you decide to erase the deployment and uninstall the cloud, just remove the current overcloud stack:

(undercloud) [stack@undercloud ~]$ openstack stack delete overcloud

Are you sure you want to delete this stack(s) [y/N]? y

If you also want to remove the nodes from the bare metal inventory and erase the introspection results before the next deployment, delete all the nodes:

(undercloud) [stack@undercloud ~]$ openstack baremetal node delete Oracle_Sun_Fire_X4270_jupiter

Deleted node Oracle_Sun_Fire_X4270_jupiter

(undercloud) [stack@undercloud ~]$ openstack baremetal node delete Oracle_Sun_Fire_X4270_venus

Deleted node Oracle_Sun_Fire_X4270_venus

(undercloud) [stack@undercloud ~]$ openstack baremetal node delete Oracle_Sun_Fire_X4270_saturn

Deleted node Oracle_Sun_Fire_X4270_saturn

Once the bare metal node list is empty, you will have to repeat the node registration, introspection and provisioning procedure before the next deployment.
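With more than a few nodes, you can feed the node list into a loop instead of typing one delete command per node. The following is a minimal bash sketch. DRY_RUN is a hypothetical switch for this example: it defaults to 1, which only prints the commands, so you can review them before anything is deleted.

```bash
# Minimal sketch: delete every bare metal node whose name arrives on stdin.
# DRY_RUN (hypothetical): 1 (default) only prints the commands, 0 runs them.
delete_nodes() {
  local dry_run="${DRY_RUN:-1}" node
  while read -r node; do
    [ -n "$node" ] || continue          # skip blank lines
    if [ "$dry_run" = "1" ]; then
      echo "openstack baremetal node delete $node"
    else
      # </dev/null keeps the CLI from reading the node list the loop is consuming
      openstack baremetal node delete "$node" < /dev/null || return 1
    fi
  done
}

# Usage on the Undercloud (review the printed commands, then rerun with DRY_RUN=0):
#   openstack baremetal node list -f value -c Name | delete_nodes
#   openstack baremetal node list -f value -c Name | DRY_RUN=0 delete_nodes
```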

Deploying TripleO OpenStack using network isolation with VLANs and NIC bonding

In this scenario NIC1 is used only for the provisioning / control plane network, which is handled by VLAN 2. NIC2 and NIC3 become slave interfaces of the bond1 interface, working in active-backup mode, which carries all the OpenStack core network VLANs (just like the single NIC in the previous scenario):

- Internal API (VLAN 3)

- Storage (VLAN 4)

- Storage Management (VLAN 5)

- External (VLAN 6)

- Tenant (VLAN 7)

- Floating IP (VLAN 8)

Network topology presenting VLAN isolation based on NIC2/NIC3 bonding (active-backup):

Server-side interface bonding alone is not enough to provide full failover redundancy in active-backup mode; we also need switch-side redundancy. That’s why I use two access switches connected together, which preserves connectivity even if NIC2 fails on one node while NIC3 fails on another. My old 3Com 4200 switches do not support the Multiple Spanning Tree Protocol (802.1s), so I connect both access switches via an aggregation switch to avoid a network loop.

Switch front panel view presenting network setup for NIC Bonding VLAN isolation:

Prior to the deployment we need to prepare the network-environment.yaml file. Let’s copy it from the template, just like we did in the previous case:

(undercloud) [stack@undercloud ~]$ cp /usr/share/openstack-tripleo-heat-templates/environments/network-environment.yaml ~/templates/network-environment-bond.yaml

Modify the network-environment-bond.yaml template so that it looks like this:

resource_registry:
  # Network Interface templates to use (these files must exist)
  OS::TripleO::BlockStorage::Net::SoftwareConfig: /usr/share/openstack-tripleo-heat-templates/network/config/bond-with-vlans/cinder-storage.yaml
  OS::TripleO::Compute::Net::SoftwareConfig: /usr/share/openstack-tripleo-heat-templates/network/config/bond-with-vlans/compute.yaml
  OS::TripleO::Controller::Net::SoftwareConfig: /usr/share/openstack-tripleo-heat-templates/network/config/bond-with-vlans/controller.yaml
  OS::TripleO::ObjectStorage::Net::SoftwareConfig: /usr/share/openstack-tripleo-heat-templates/network/config/bond-with-vlans/swift-storage.yaml
  OS::TripleO::CephStorage::Net::SoftwareConfig: /usr/share/openstack-tripleo-heat-templates/network/config/bond-with-vlans/ceph-storage.yaml

parameter_defaults:
  # This section is where deployment-specific configuration is done
  # CIDR subnet mask length for provisioning network
  ControlPlaneSubnetCidr: '24'
  # Gateway router for the provisioning network (or Undercloud IP)
  ControlPlaneDefaultRoute: 192.168.24.1
  EC2MetadataIp: 192.168.24.1  # Generally the IP of the Undercloud
  # Customize the IP subnets to match the local environment
  InternalApiNetCidr: 172.17.0.0/24
  StorageNetCidr: 172.18.0.0/24
  StorageMgmtNetCidr: 172.19.0.0/24
  TenantNetCidr: 172.16.0.0/24
  ExternalNetCidr: 10.0.0.0/24
  # Customize the VLAN IDs to match the local environment
  InternalApiNetworkVlanID: 3
  StorageNetworkVlanID: 4
  StorageMgmtNetworkVlanID: 5
  TenantNetworkVlanID: 7
  ExternalNetworkVlanID: 6
  # Customize the IP ranges on each network to use for static IPs and VIPs
  InternalApiAllocationPools: [{'start': '172.17.0.10', 'end': '172.17.0.200'}]
  StorageAllocationPools: [{'start': '172.18.0.10', 'end': '172.18.0.200'}]
  StorageMgmtAllocationPools: [{'start': '172.19.0.10', 'end': '172.19.0.200'}]
  TenantAllocationPools: [{'start': '172.16.0.10', 'end': '172.16.0.200'}]
  # Leave room if the external network is also used for floating IPs
  ExternalAllocationPools: [{'start': '10.0.0.10', 'end': '10.0.0.12'}]
  # Gateway router for the external network
  ExternalInterfaceDefaultRoute: 10.0.0.1
  # Uncomment if using the Management Network (see network-management.yaml)
  # ManagementNetCidr: 10.0.1.0/24
  # ManagementAllocationPools: [{'start': '10.0.1.10', 'end': '10.0.1.50'}]
  # Use either this parameter or ControlPlaneDefaultRoute in the NIC templates
  # ManagementInterfaceDefaultRoute: 10.0.1.1
  # Define the DNS servers (maximum 2) for the overcloud nodes
  #DnsServers: ["8.8.8.8","8.8.4.4"]
  # List of Neutron network types for tenant networks (will be used in order)
  NeutronNetworkType: 'vlan'
  # The tunnel type for the tenant network (vxlan or gre). Set to '' to disable tunneling.
  #NeutronTunnelTypes: 'vxlan'
  # Neutron VLAN ranges per network, for example 'datacentre:1:499,tenant:500:1000':
  # gjuszczak mod start
  #NeutronExternalNetworkBridge: "''"
  #NeutronBridgeMappings: 'physnet0:br-ex'
  NeutronNetworkVLANRanges: 'physnet0:9:99'
  # Customize bonding options, e.g. "mode=4 lacp_rate=1 updelay=1000 miimon=100"
  # for Linux bonds w/LACP, or "bond_mode=active-backup" for OVS active/backup.
  BondInterfaceOvsOptions: "bond_mode=active-backup"

The external network gateway (10.0.0.1 in my case) must be pingable for the deployment to complete successfully.
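NeutronNetworkVLANRanges 'physnet0:9:99' keeps tenant VLAN allocation starting at 9. That leaves VLAN 2 (control plane), VLANs 3 to 7 (isolated networks) and VLAN 8 (Floating IP) outside the range, presumably so that tenant networks never collide with infrastructure VLANs on the same trunk. The following is a minimal bash sketch of that overlap check. It handles a single physnet:min:max entry only, not comma-separated lists, and the helper name is hypothetical.

```bash
# Minimal sketch: report which reserved VLAN IDs fall inside a Neutron VLAN range
# given as "physnet:min:max" (single entry only; comma-separated lists not handled).
vlan_range_conflicts() {
  local spec="$1" min max v conflicts=""
  shift
  IFS=: read -r _ min max <<< "$spec"    # physnet name is read into _ and ignored
  for v in "$@"; do
    if (( v >= min && v <= max )); then
      conflicts="$conflicts $v"
    fi
  done
  echo "${conflicts# }"
}

# Infrastructure VLANs in this article: 2 (control plane), 3-7 (isolated networks), 8 (Floating IP).
#   vlan_range_conflicts 'physnet0:9:99' 2 3 4 5 6 7 8    # prints nothing: no overlap
```

For the gateway requirement, a plain ping -c 3 10.0.0.1 from a host attached to the External VLAN is a quick pre-check before you start the deployment.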

The resource_registry includes paths to the Heat templates for all types of overcloud nodes included in the deployment. This time, unlike in the single NIC VLAN case, the configuration in these templates is already in line with my deployment assumptions, i.e. NIC2 and NIC3 are assigned to the bond1 interface:

...
members:
- type: ovs_bond
  name: bond1
  ovs_options:
    get_param: BondInterfaceOvsOptions
  members:
  - type: interface
    name: nic2
    primary: true
  - type: interface
    name: nic3
...

So there is no need to change anything here.
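The resource_registry comment states that the referenced NIC templates must exist. A quick pre-deployment check lists any that are missing. This minimal bash sketch assumes each OS::TripleO::&lt;Role&gt;::Net::SoftwareConfig entry sits on one line with an absolute path, as in the file above. It does not resolve entries split across lines or relative paths. The helper name is hypothetical.

```bash
# Minimal sketch: list NIC config templates referenced in resource_registry that
# do not exist on disk. Assumes one-line entries with absolute paths.
missing_nic_templates() {
  local env_file="$1" path
  grep -E '^[[:space:]]*OS::TripleO::[A-Za-z]+::Net::SoftwareConfig:' "$env_file" |
    awk '{ print $2 }' |
    while read -r path; do
      [ -n "$path" ] || continue         # entry whose path is on the next line: skipped
      [ -f "$path" ] || echo "$path"
    done
}

# Usage (no output means every referenced template exists):
#   missing_nic_templates ~/templates/network-environment-bond.yaml
```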

The following command deploys OpenStack on one Controller node and two Compute nodes with VLAN isolation based on NIC bonding:

(undercloud) [stack@undercloud ~]$ openstack overcloud deploy --control-flavor control --control-scale 1 --compute-flavor compute --compute-scale 2 --templates -e /usr/share/openstack-tripleo-heat-templates/environments/network-isolation.yaml -e ~/templates/network-environment-bond.yaml --ntp-server 192.168.24.1

Below is an example of the successful deployment message. It includes the overcloud endpoint IP (10.0.0.12), which also serves the Horizon dashboard:

...

2019-02-03 17:11:05Z [overcloud.AllNodesDeploySteps]: CREATE_COMPLETE Stack CREATE completed successfully

2019-02-03 17:11:05Z [overcloud.AllNodesDeploySteps]: CREATE_COMPLETE state changed

2019-02-03 17:11:05Z [overcloud]: CREATE_COMPLETE Stack CREATE completed successfully

Stack overcloud CREATE_COMPLETE

Host 10.0.0.12 not found in /home/stack/.ssh/known_hosts

Overcloud Endpoint: http://10.0.0.12:5000/v2.0

Overcloud Deployed

(undercloud) [stack@undercloud ~]$

Post-deployment brief check

Check the overcloud admin password in the overcloudrc file, which is generated automatically during the deployment:

(undercloud) [stack@undercloud ~]$ cat ~/overcloudrc | grep OS_PASSWORD

export OS_PASSWORD=etgVyE4DCFXc7WgyGMmVHKfGg

Launch your web browser and try to connect to the dashboard:

http://10.0.0.12/dashboard

You should see the Horizon dashboard login screen. Use the admin/etgVyE4DCFXc7WgyGMmVHKfGg credentials to log in to the dashboard.

Verify the overcloud stack status from Undercloud node:

(undercloud) [stack@undercloud ~]$ openstack stack list

+--------------------------------------+------------+----------------------------------+-----------------+----------------------+--------------+

| ID | Stack Name | Project | Stack Status | Creation Time | Updated Time |

+--------------------------------------+------------+----------------------------------+-----------------+----------------------+--------------+

| fa7d2ddf-6c14-466f-bf59-5da0e0cdf0ac | overcloud | c52fd78c2a89437b8fbb1a64c7238448 | CREATE_COMPLETE | 2019-02-03T15:45:45Z | None |

+--------------------------------------+------------+----------------------------------+-----------------+----------------------+--------------+

List the Overcloud nodes with their corresponding control plane IP addresses:

(undercloud) [stack@undercloud ~]$ nova list

+--------------------------------------+-------------------------+--------+------------+-------------+------------------------+

| ID | Name | Status | Task State | Power State | Networks |

+--------------------------------------+-------------------------+--------+------------+-------------+------------------------+

| 0699391c-564f-4066-8064-7947c1a777e8 | overcloud-controller-0 | ACTIVE | - | Running | ctlplane=192.168.24.10 |

| 10aa5dda-5ae6-49f7-80ac-f66588881588 | overcloud-novacompute-0 | ACTIVE | - | Running | ctlplane=192.168.24.8 |

| 185e5d22-9b34-4205-91ad-926ff3551665 | overcloud-novacompute-1 | ACTIVE | - | Running | ctlplane=192.168.24.11 |

+--------------------------------------+-------------------------+--------+------------+-------------+------------------------+

Log in to the Overcloud nodes one by one from the Undercloud node as the heat-admin user (no password is required) and verify the network configuration.
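If you want a quick overview before logging in to each node interactively, you can turn the server list into name/IP pairs and loop over them. The following is a minimal bash sketch. It assumes that `openstack server list -f value -c Name -c Networks` prints lines such as "overcloud-controller-0 ctlplane=192.168.24.10", matching the Networks column above. The helper name ctlplane_ips is hypothetical.

```bash
# Minimal sketch: turn `openstack server list -f value -c Name -c Networks` output
# (lines like "overcloud-controller-0 ctlplane=192.168.24.10") into "name ip" pairs.
ctlplane_ips() {
  sed -n 's/^\([^ ]*\) ctlplane=\([0-9.]*\).*/\1 \2/p'
}

# Usage on the Undercloud: print each node's IPv4 addresses in one pass.
# ssh -n stops ssh from swallowing the rest of the loop's stdin.
#   openstack server list -f value -c Name -c Networks | ctlplane_ips |
#     while read -r name ip; do
#       echo "== $name ($ip)"
#       ssh -n "heat-admin@$ip" ip -o -4 addr show
#     done
```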

Verify the Controller node:

(undercloud) [stack@undercloud ~]$ ssh heat-admin@192.168.24.10

Switch to root:

[heat-admin@overcloud-controller-0 ~]$ sudo su -

Check network interfaces (including VLAN NICs):

[root@overcloud-controller-0 ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp1s0f0: mtu 1500 qdisc mq state DOWN group default qlen 1000

link/ether 00:21:28:d7:98:0e brd ff:ff:ff:ff:ff:ff

3: eno1: mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:21:28:d7:98:0f brd ff:ff:ff:ff:ff:ff

inet 192.168.24.10/24 brd 192.168.24.255 scope global eno1

valid_lft forever preferred_lft forever

inet 192.168.24.6/32 scope global eno1

valid_lft forever preferred_lft forever

inet6 fe80::221:28ff:fed7:980f/64 scope link

valid_lft forever preferred_lft forever

4: eno2: mtu 1500 qdisc mq master ovs-system state UP group default qlen 1000

link/ether 00:21:28:d7:98:10 brd ff:ff:ff:ff:ff:ff

inet6 fe80::221:28ff:fed7:9810/64 scope link

valid_lft forever preferred_lft forever

5: eno3: mtu 1500 qdisc mq master ovs-system state UP group default qlen 1000

link/ether 00:21:28:d7:98:11 brd ff:ff:ff:ff:ff:ff

inet6 fe80::221:28ff:fed7:9811/64 scope link

valid_lft forever preferred_lft forever

6: ovs-system: mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether b6:f2:4e:73:53:90 brd ff:ff:ff:ff:ff:ff

7: br-ex: mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether 00:21:28:d7:98:10 brd ff:ff:ff:ff:ff:ff

inet6 fe80::444f:72ff:fe1f:1b4b/64 scope link

valid_lft forever preferred_lft forever

8: vlan3: mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether 4e:21:4c:87:74:30 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.10/24 brd 172.17.0.255 scope global vlan3

valid_lft forever preferred_lft forever

inet 172.17.0.19/32 scope global vlan3

valid_lft forever preferred_lft forever

inet 172.17.0.18/32 scope global vlan3

valid_lft forever preferred_lft forever

inet6 fe80::4c21:4cff:fe87:7430/64 scope link

valid_lft forever preferred_lft forever

9: vlan4: mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether 1a:87:c4:59:2a:24 brd ff:ff:ff:ff:ff:ff

inet 172.18.0.16/24 brd 172.18.0.255 scope global vlan4

valid_lft forever preferred_lft forever

inet 172.18.0.15/32 scope global vlan4

valid_lft forever preferred_lft forever

inet6 fe80::1887:c4ff:fe59:2a24/64 scope link

valid_lft forever preferred_lft forever

10: vlan5: mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether 9e:99:1e:9b:26:58 brd ff:ff:ff:ff:ff:ff

inet 172.19.0.13/24 brd 172.19.0.255 scope global vlan5

valid_lft forever preferred_lft forever

inet 172.19.0.14/32 scope global vlan5

valid_lft forever preferred_lft forever

inet6 fe80::9c99:1eff:fe9b:2658/64 scope link

valid_lft forever preferred_lft forever

11: vlan6: mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether 2a:d7:91:85:e4:a9 brd ff:ff:ff:ff:ff:ff

inet 10.0.0.10/24 brd 10.0.0.255 scope global vlan6

valid_lft forever preferred_lft forever

inet 10.0.0.12/32 scope global vlan6

valid_lft forever preferred_lft forever

inet6 fe80::28d7:91ff:fe85:e4a9/64 scope link

valid_lft forever preferred_lft forever

12: vlan7: mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether e2:ea:bf:dd:79:05 brd ff:ff:ff:ff:ff:ff

inet 172.16.0.12/24 brd 172.16.0.255 scope global vlan7

valid_lft forever preferred_lft forever

inet6 fe80::e0ea:bfff:fedd:7905/64 scope link

valid_lft forever preferred_lft forever

13: br-int: mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 0a:c8:02:a1:3e:42 brd ff:ff:ff:ff:ff:ff

14: br-tun: mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 6a:64:51:8a:f4:4a brd ff:ff:ff:ff:ff:ff

15: vxlan_sys_4789: mtu 65520 qdisc noqueue master ovs-system state UNKNOWN group default qlen 1000

link/ether 22:97:7e:85:ea:78 brd ff:ff:ff:ff:ff:ff

inet6 fe80::2097:7eff:fe85:ea78/64 scope link

valid_lft forever preferred_lft forever

Display OVS configuration:

[root@overcloud-controller-0 ~]# ovs-vsctl show

bcae85d6-5e30-4cf5-a161-28180128e67b

Manager "ptcp:6640:127.0.0.1"

is_connected: true

Bridge br-ex

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port "vlan6"

tag: 6

Interface "vlan6"

type: internal

Port br-ex

Interface br-ex

type: internal

Port "vlan5"

tag: 5

Interface "vlan5"

type: internal

Port "vlan7"

tag: 7

Interface "vlan7"

type: internal

Port "vlan4"

tag: 4

Interface "vlan4"

type: internal

Port "bond1"

Interface "eno3"

Interface "eno2"

Port phy-br-ex

Interface phy-br-ex

type: patch

options: {peer=int-br-ex}

Port "vlan3"

tag: 3

Interface "vlan3"

type: internal

Bridge br-tun

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port "vxlan-ac10000d"

Interface "vxlan-ac10000d"

type: vxlan

options: {df_default="true", in_key=flow, local_ip="172.16.0.12", out_key=flow, remote_ip="172.16.0.13"}

Port "vxlan-ac10000e"

Interface "vxlan-ac10000e"

type: vxlan

options: {df_default="true", in_key=flow, local_ip="172.16.0.12", out_key=flow, remote_ip="172.16.0.14"}

Port br-tun

Interface br-tun

type: internal

Port patch-int

Interface patch-int

type: patch

options: {peer=patch-tun}

Bridge br-int

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port br-int

Interface br-int

type: internal

Port patch-tun

Interface patch-tun

type: patch

options: {peer=patch-int}

Port int-br-ex

Interface int-br-ex

type: patch

options: {peer=phy-br-ex}

ovs_version: "2.7.3"

In the output above, the external OVS bridge br-ex is built on the bond1 interface. bond1 includes the eno2 and eno3 slave interfaces (configured in active-backup mode), exactly as intended.
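ovs-vsctl show confirms the bond1 membership, but not which slave is currently active. ovs-appctl bond/show bond1 reports that. The sketch below condenses its output into one line. It assumes the OVS 2.7-era wording of that output ("bond_mode:", "active slave mac: ...(eno2)", "slave eno2: enabled"). Later OVS releases changed this wording, so compare with your own output first. bond_summary is a hypothetical name.

```bash
# Minimal sketch: condense `ovs-appctl bond/show <bond>` output into one line.
# Assumes the OVS 2.7-era wording, e.g.
#   bond_mode: active-backup
#   active slave mac: 00:21:28:d7:98:10(eno2)
#   slave eno2: enabled
bond_summary() {
  awk '
    /^bond_mode:/        { mode = $2 }
    /^active slave mac:/ { active = $NF; sub(/^.*\(/, "", active); sub(/\)$/, "", active) }
    /^slave [^ ]+: /     { slaves++ }
    END { printf "mode=%s active=%s slaves=%d\n", mode, active, slaves }
  '
}

# Usage on the Controller or a Compute node:
#   ovs-appctl bond/show bond1 | bond_summary
```

To exercise the failover on a non-production node, unplug the NIC2 cable and rerun the command. The active slave should move to eno3.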

Verify the Compute 0 node:

(undercloud) [stack@undercloud ~]$ ssh heat-admin@192.168.24.8

[heat-admin@overcloud-novacompute-0 ~]$ sudo su -

Check network interfaces (including VLAN NICs):

[root@overcloud-novacompute-0 ~]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp1s0f0: mtu 1500 qdisc mq state DOWN group default qlen 1000

link/ether 00:21:28:d7:2c:8c brd ff:ff:ff:ff:ff:ff

3: eno1: mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:21:28:d7:2c:8d brd ff:ff:ff:ff:ff:ff

inet 192.168.24.8/24 brd 192.168.24.255 scope global eno1

valid_lft forever preferred_lft forever

inet6 fe80::221:28ff:fed7:2c8d/64 scope link

valid_lft forever preferred_lft forever

4: eno2: mtu 1500 qdisc mq master ovs-system state UP group default qlen 1000

link/ether 00:21:28:d7:2c:8e brd ff:ff:ff:ff:ff:ff

inet6 fe80::221:28ff:fed7:2c8e/64 scope link

valid_lft forever preferred_lft forever

5: eno3: mtu 1500 qdisc mq master ovs-system state UP group default qlen 1000

link/ether 00:21:28:d7:2c:8f brd ff:ff:ff:ff:ff:ff

inet6 fe80::221:28ff:fed7:2c8f/64 scope link

valid_lft forever preferred_lft forever

6: ovs-system: mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 16:c5:2a:27:e4:b6 brd ff:ff:ff:ff:ff:ff

7: br-ex: mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether 00:21:28:d7:2c:8e brd ff:ff:ff:ff:ff:ff

inet6 fe80::58fd:a6ff:feed:1344/64 scope link

valid_lft forever preferred_lft forever

8: vlan3: mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether f6:f2:f9:39:0d:bb brd ff:ff:ff:ff:ff:ff

inet 172.17.0.23/24 brd 172.17.0.255 scope global vlan3

valid_lft forever preferred_lft forever

inet6 fe80::f4f2:f9ff:fe39:dbb/64 scope link

valid_lft forever preferred_lft forever

9: vlan4: mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether d6:45:5f:92:ab:8d brd ff:ff:ff:ff:ff:ff

inet 172.18.0.12/24 brd 172.18.0.255 scope global vlan4

valid_lft forever preferred_lft forever

inet6 fe80::d445:5fff:fe92:ab8d/64 scope link

valid_lft forever preferred_lft forever

10: vlan7: mtu 1500 qdisc noqueue state UNKNOWN group default qlen 1000

link/ether f6:d5:ee:9e:f3:d8 brd ff:ff:ff:ff:ff:ff

inet 172.16.0.14/24 brd 172.16.0.255 scope global vlan7

valid_lft forever preferred_lft forever

inet6 fe80::f4d5:eeff:fe9e:f3d8/64 scope link

valid_lft forever preferred_lft forever

11: br-int: mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether d6:49:f9:99:89:44 brd ff:ff:ff:ff:ff:ff

12: br-tun: mtu 1500 qdisc noop state DOWN group default qlen 1000

link/ether 36:4f:56:49:6f:4c brd ff:ff:ff:ff:ff:ff

13: vxlan_sys_4789: mtu 65520 qdisc noqueue master ovs-system state UNKNOWN group default qlen 1000

link/ether f2:18:57:06:18:c3 brd ff:ff:ff:ff:ff:ff

inet6 fe80::f018:57ff:fe06:18c3/64 scope link

valid_lft forever preferred_lft forever

Display OVS configuration:

[root@overcloud-novacompute-0 ~]# ovs-vsctl show

4a0e2e64-6049-4d89-bbc2-5dd2f03271e6

Manager "ptcp:6640:127.0.0.1"

is_connected: true

Bridge br-tun

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port br-tun

Interface br-tun

type: internal

Port "vxlan-ac10000d"

Interface "vxlan-ac10000d"

type: vxlan

options: {df_default="true", in_key=flow, local_ip="172.16.0.14", out_key=flow, remote_ip="172.16.0.13"}

Port patch-int

Interface patch-int

type: patch

options: {peer=patch-tun}

Port "vxlan-ac10000c"

Interface "vxlan-ac10000c"

type: vxlan

options: {df_default="true", in_key=flow, local_ip="172.16.0.14", out_key=flow, remote_ip="172.16.0.12"}

Bridge br-int

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port patch-tun

Interface patch-tun

type: patch

options: {peer=patch-int}

Port int-br-ex

Interface int-br-ex

type: patch

options: {peer=phy-br-ex}

Port br-int

Interface br-int

type: internal

Bridge br-ex

Controller "tcp:127.0.0.1:6633"

is_connected: true

fail_mode: secure

Port "vlan7"

tag: 7

Interface "vlan7"

type: internal

Port "vlan4"

tag: 4

Interface "vlan4"

type: internal

Port "bond1"

Interface "eno3"

Interface "eno2"

Port "vlan3"

tag: 3

Interface "vlan3"

type: internal

Port br-ex

Interface br-ex

type: internal

Port phy-br-ex

Interface phy-br-ex

type: patch

options: {peer=int-br-ex}

ovs_version: "2.7.3"

Compute 1 has the same interface and OVS layout as Compute 0, apart from its own IP and MAC addresses.
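To confirm that Compute 1 really matches Compute 0 without reading both outputs line by line, you can diff the two nodes' interface name lists. The following is a minimal bash sketch. It assumes the one-line `ip -o link show` format, and it uses the Compute control plane IPs from the nova list output above. The helper name iface_names is hypothetical.

```bash
# Minimal sketch: reduce `ip -o link show` output to a sorted list of interface
# names (dropping any "@parent" suffix), so two nodes' layouts can be diffed.
iface_names() {
  awk -F': ' '{ name = $2; sub(/@.*/, "", name); print name }' | LC_ALL=C sort
}

# Usage on the Undercloud (Compute 0 = 192.168.24.8, Compute 1 = 192.168.24.11):
#   diff <(ssh heat-admin@192.168.24.8 ip -o link show | iface_names) \
#        <(ssh heat-admin@192.168.24.11 ip -o link show | iface_names) && echo "same layout"
```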

Optionally, create a dedicated Floating IP network if you need to access your Instances from outside through a Neutron router (qrouter).

Source the overcloudrc file, which includes the Keystone credentials required to manage the cloud:

(undercloud) [stack@undercloud ~]$ source overcloudrc

Note: remember that you need a route from the Undercloud machine to the external network (VLAN 6) in order to run commands against the overcloud. If you don’t have one, copy the overcloudrc file to the Controller node, log in to the Controller as heat-admin, source the file and run the commands below from the Controller.

Create Floating IP network, based on VLAN 8:

(overcloud) [stack@undercloud ~]$ openstack network create --external --share --provider-network-type vlan --provider-physical-network physnet0 --provider-segment 8 --enable-port-security --project admin floating-ip-net

Create a subnet for the Floating IP network:

(overcloud) [stack@undercloud ~]$ openstack subnet create --subnet-range 10.0.1.0/24 --gateway 10.0.1.1 --network floating-ip-net --allocation-pool start=10.0.1.9,end=10.0.1.99 floating-ip-subnet

You now have a fully operational OpenStack cloud deployed with isolated VLANs, and you are ready to create your Tenants and Instances. Network isolation makes network traffic easier to troubleshoot in case of failures or bottlenecks. It also separates the Controller node, which is connected to the External network, from the Floating IP network, where users access their Instances. This gives additional protection against DoS/DDoS attack attempts. If you cannot separate the External and Floating IP networks, you can, as a last resort, use one network for both the Controller external IPs and the Floating IPs, but this solution is less secure and generally not recommended.

Based on some of the new features we are looking at Stein to finally get an OOO installation set up, mostly for some of the new ironic and networking features.

It looks like these configuration files for network isolation are no longer here, but are Jinja template files. I am having trouble finding anything online about how to use these, maybe someone is waiting for April 10 to release this documentation?

I am trying to leverage a single network with VLAN approach, as many of my systems are blades and do not have a second nic option (without spending $$$$$ on extra blade switches). The new switch management features in Stein look to make this viable (pxe boot on control plane, then change native VLAN to Tenant VLAN on switch while the node boots)

Have you looked at some of the differences here between Pike and Queens/Stein? This bug report does not seem to have a solution:

https://bugzilla.redhat.com/show_bug.cgi?id=1465564

Currently the install hangs when importing nodes, so there may be more going on here. I did add some fields to the format, but the undercloud can ping all the BMC addresses (I don’t know what process is going on here when importing the instackenv.json):

{
  "nodes": [
    {
      "name": "cont0",
      "capabilities": "profile:controller,boot_option:local",
      "pm_type": "idrac",
      "ports": [
        {
          "address": "b4:e1:0f:44:96:51",
          "physical_network": "ctlplane",
          "local_link_connection": {
            "switch_info": "r1sx1024",
            "port_id": "Eth1/1",
            "switch_id": "7c:fe:90:ea:10:40"
          }
        }
      ],
      "cpu": "24",
      "memory": "131072",
      "disk": "900",
      "arch": "x86_64",
      "pm_user": "root",
      "pm_password": "calvin",
      "pm_addr": "10.31.1.1",
      "_comment": "Controller1_C1S1"
    },
    {
      "name": "cont1",
      "capabilities": "profile:controller,boot_option:local",
      "pm_type": "idrac",
      "ports": [
        {
          "address": "b4:e1:0f:44:96:5e",
          "physical_network": "ctlplane",
          "local_link_connection": {
            "switch_info": "r1sx1024",
            "port_id": "Eth1/2",
            "switch_id": "7c:fe:90:ea:10:40"
          }
        }
      ],
      "cpu": "24",
      "memory": "131072",
      "disk": "900",
      "arch": "x86_64",
      "pm_user": "root",
      "pm_password": "calvin",
      "pm_addr": "10.31.1.2",
      "_comment": "Controller2_C1S2"
    },
    {
      "name": "cont2",
      "capabilities": "profile:controller,boot_option:local",
      "pm_type": "idrac",
      "ports": [
        {
          "address": "b4:e1:0f:44:96:6b",
          "physical_network": "ctlplane",
          "local_link_connection": {
            "switch_info": "r1sx1024",
            "port_id": "Eth1/3",
            "switch_id": "7c:fe:90:ea:10:40"
          }
        }
      ],
      "cpu": "24",
      "memory": "131072",
      "disk": "900",
      "arch": "x86_64",
      "pm_user": "root",
      "pm_password": "calvin",
      "pm_addr": "10.31.1.3",
      "_comment": "Controller3_C1S3"
    },
    {
      "name": "node0",
      "capabilities": "profile:compute,boot_option:local",
      "pm_type": "idrac",
      "ports": [
        {
          "address": "b4:e1:0f:44:96:78",
          "physical_network": "ctlplane",
          "local_link_connection": {
            "switch_info": "r1sx1024",
            "port_id": "Eth1/4",
            "switch_id": "7c:fe:90:ea:10:40"
          }
        }
      ],
      "cpu": "24",
      "memory": "131072",
      "disk": "900",
      "arch": "x86_64",
      "pm_user": "root",
      "pm_password": "calvin",
      "pm_addr": "10.31.1.4",
      "_comment": "Node1_C1S4"
    }
  ]
}

Ok, likely has less to do with the instackenv.json and more to do with the broken install. I’ll wait for Stein to be ‘official’ from tripleo, too many undefined top level and other variables. This may be why my network templates were not generated from the j2 template files, rendering all my questions moot.

Apr 4 15:43:02 undercloud puppet-user[12708]: Unknown variable: '::deployment_type'. (file: /etc/puppet/modules/tripleo/manifests/profile/base/database/mysql/client.pp, line: 85, column: 31)
Apr 4 15:43:04 undercloud puppet-user[12708]: Unknown variable: '::swift::client_package_ensure'. (file: /etc/puppet/modules/swift/manifests/client.pp, line: 12, column: 13)
Apr 4 15:45:35 undercloud puppet-user[17]: Unknown variable: 'methods_real'. (file: /etc/puppet/modules/swift/manifests/proxy/tempurl.pp, line: 100, column: 56)
Apr 4 15:45:35 undercloud puppet-user[17]: Unknown variable: 'incoming_remove_headers_real'. (file: /etc/puppet/modules/swift/manifests/proxy/tempurl.pp, line: 101, column: 56)
Apr 4 15:45:35 undercloud puppet-user[17]: Unknown variable: 'incoming_allow_headers_real'. (file: /etc/puppet/modules/swift/manifests/proxy/tempurl.pp, line: 102, column: 56)
Apr 4 15:45:35 undercloud puppet-user[17]: Unknown variable: 'outgoing_remove_headers_real'. (file: /etc/puppet/modules/swift/manifests/proxy/tempurl.pp, line: 103, column: 56)
Apr 4 15:45:35 undercloud puppet-user[17]: Unknown variable: 'outgoing_allow_headers_real'. (file: /etc/puppet/modules/swift/manifests/proxy/tempurl.pp, line: 104, column: 56)
Apr 4 15:45:49 undercloud puppet-user[16]: Unknown variable: '::nova::compute::pci_passthrough'. (file: /etc/puppet/modules/nova/manifests/compute/pci.pp, line: 19, column: 38)
Apr 4 15:46:22 undercloud puppet-user[17]: Unknown variable: 'default_store_real'. (file: /etc/puppet/modules/glance/manifests/api.pp, line: 436, column: 9)
Apr 4 15:46:22 undercloud puppet-user[17]: Unknown variable: '::swift::client_package_ensure'. (file: /etc/puppet/modules/swift/manifests/client.pp, line: 12, column: 13)
Apr 4 15:47:13 undercloud puppet-user[16]: Unknown variable: '::mistral::database_idle_timeout'. (file: /etc/puppet/modules/mistral/manifests/db.pp, line: 62, column: 40)
Apr 4 15:47:13 undercloud puppet-user[16]: Unknown variable: '::mistral::database_min_pool_size'. (file: /etc/puppet/modules/mistral/manifests/db.pp, line: 63, column: 40)
Apr 4 15:47:13 undercloud puppet-user[16]: Unknown variable: '::mistral::database_max_pool_size'. (file: /etc/puppet/modules/mistral/manifests/db.pp, line: 64, column: 40)
Apr 4 15:47:13 undercloud puppet-user[16]: Unknown variable: '::mistral::database_max_retries'. (file: /etc/puppet/modules/mistral/manifests/db.pp, line: 65, column: 40)
Apr 4 15:47:13 undercloud puppet-user[16]: Unknown variable: '::mistral::database_retry_interval'. (file: /etc/puppet/modules/mistral/manifests/db.pp, line: 66, column: 40)
Apr 4 15:47:13 undercloud puppet-user[16]: Unknown variable: '::mistral::database_max_overflow'. (file: /etc/puppet/modules/mistral/manifests/db.pp, line: 67, column: 40)

Hi Michael

I haven’t tested Stein yet, because it’s too fresh. Fresh releases tend to have bugs, so I usually work with older releases that are still supported.

Grzegorz,

Yeah, that’s what I’ve found. I am not sure why the documentation needs to vary so much between releases. I finally got my Rocky stack up without errors. Even some of the Rocky documentation on HA controllers was wrong, and the bug reports were just closed as ‘deal with it’. Neat! I did have to define `DnsServers`; otherwise the deployment would try to use 10.0.0.1, which didn’t exist, and fail.

openstack overcloud deploy \
--templates /usr/share/openstack-tripleo-heat-templates \
--stack rockyracoon \
--libvirt-type kvm \
--ntp-server 10.40.0.1 \
-e /usr/share/openstack-tripleo-heat-templates/environments/network-isolation.yaml \
-e /usr/share/openstack-tripleo-heat-templates/environments/network-environment.yaml \
-e /usr/share/openstack-tripleo-heat-templates/environments/docker.yaml \
-e /usr/share/openstack-tripleo-heat-templates/environments/docker-ha.yaml \
-e ~/templates/network-environment-overrides.yaml \
--log-file overcloud_deployment.log

I can post the network bits of the environment-overrides file, but they don’t really differ from yours other than the DNS string.
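For reference, the DNS part of such an override file could look like the sketch below. It assumes the file sets values under `parameter_defaults`, as the stock TripleO network environment files do; the resolver addresses are placeholders, not the values used in the deployment above.

```yaml
# Hypothetical excerpt of ~/templates/network-environment-overrides.yaml
parameter_defaults:
  # Resolvers handed to the Overcloud nodes; replace the placeholders
  # with DNS servers that are actually reachable from your networks.
  DnsServers: ["8.8.8.8", "8.8.4.4"]
```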

Glad you succeeded. My first deployment failure on Pike was caused by the non-existent gateway 10.0.0.1 for the external network, which is set in the YAML by default. I had to configure a gateway with that address and connect it to the external network; otherwise the deployment would fail during network setup.
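If a router already exists on the external network, another option might be to point the default route at it instead of creating one at 10.0.0.1. A minimal sketch, assuming the external network parameters from the stock network-environment.yaml and a hypothetical external subnet 192.168.100.0/24 whose router sits at .1:

```yaml
parameter_defaults:
  # Hypothetical external subnet; adjust to your environment
  ExternalNetCidr: '192.168.100.0/24'
  ExternalAllocationPools: [{'start': '192.168.100.10', 'end': '192.168.100.50'}]
  # Must be a router that really exists on the external network,
  # otherwise network setup on the Overcloud nodes fails
  ExternalInterfaceDefaultRoute: '192.168.100.1'
```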

Hi Grzegorz,

I have a question about the files below: if I deploy only Controller and Compute nodes, are they still needed? If yes, could you please let me know why they are required?

resource_registry:
  # Network Interface templates to use (these files must exist)
  OS::TripleO::BlockStorage::Net::SoftwareConfig: /usr/share/openstack-tripleo-heat-templates/network/config/bond-with-vlans/cinder-storage.yaml
  OS::TripleO::ObjectStorage::Net::SoftwareConfig: /usr/share/openstack-tripleo-heat-templates/network/config/bond-with-vlans/swift-storage.yaml

Hi Syed

No, they are not needed if you are not going to deploy separate BlockStorage and ObjectStorage nodes. I just keep these lines in my file, just in case, for future use.
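For a deployment with only Controller and Compute nodes, the `resource_registry` could be reduced to the two roles actually deployed. A sketch, assuming the stock `bond-with-vlans` NIC templates shipped with tripleo-heat-templates are used unchanged:

```yaml
resource_registry:
  # Network Interface templates to use (these files must exist)
  OS::TripleO::Controller::Net::SoftwareConfig: /usr/share/openstack-tripleo-heat-templates/network/config/bond-with-vlans/controller.yaml
  OS::TripleO::Compute::Net::SoftwareConfig: /usr/share/openstack-tripleo-heat-templates/network/config/bond-with-vlans/compute.yaml
```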

Nice article. A workaround for interface naming could be disabling the predictable network interface names feature in the OS. See https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/7/html/networking_guide/sec-disabling_consistent_network_device_naming

Hi Miguel

Yes, I was planning to use predictable network interfaces, but to make it work on Overcloud nodes during deployment, I would have to prepare Heat templates that change the interface naming and run them in an early stage of the deployment. This requires a lot of testing, which will take a while.

If you have a working Heat template for changing interface names and would like to share it with me, I would be grateful 🙂

Anyway, thanks for the heads-up.