OpenStack Kilo 3 Node Installation (Controller, Network, Compute) on CentOS 7

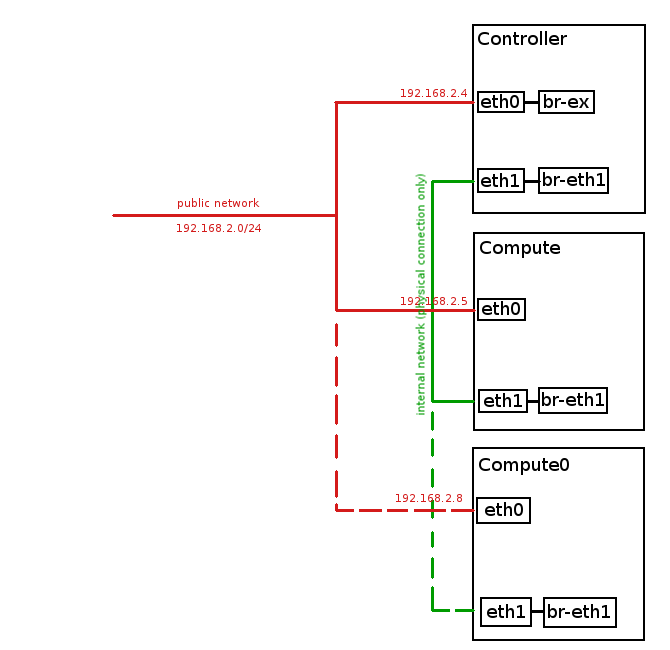

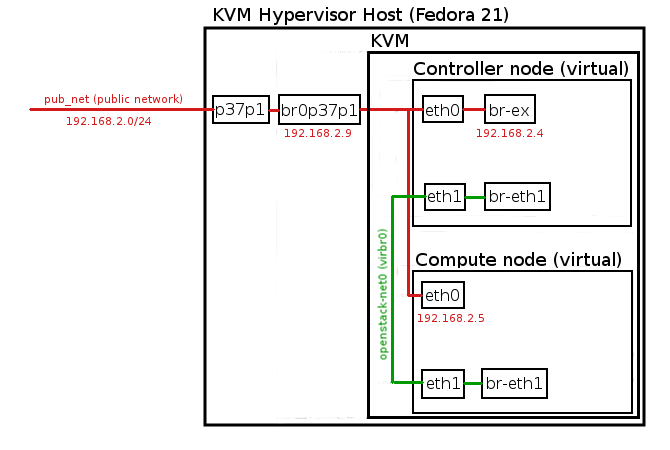

In this tutorial we will install the OpenStack Kilo release from the RDO repository on three nodes (Controller, Network, Compute) running CentOS 7, using the packstack automated installer. The installation uses a VLAN-based internal software network for communication between instances.

Environment used:

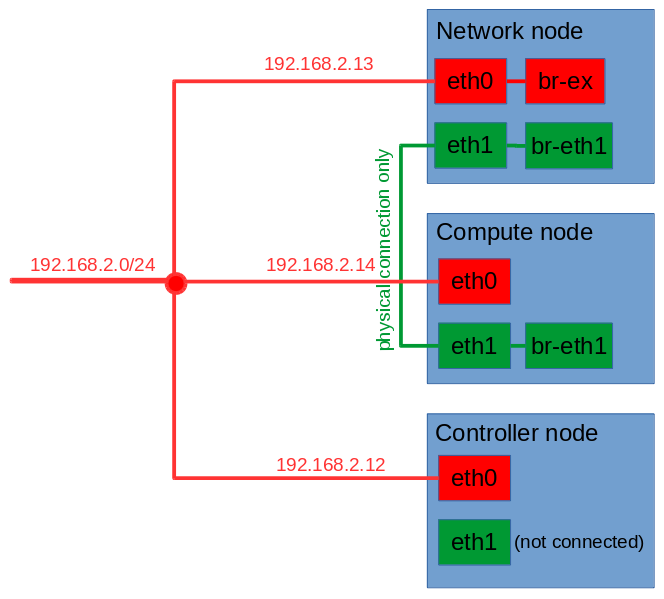

public network (Floating IP network): 192.168.2.0/24

internal network (on each node): no IP space, physical connection only (eth1)

controller node public IP: 192.168.2.12 (eth0)

network node public IP: 192.168.2.13 (eth0)

compute node public IP: 192.168.2.14 (eth0)

OS version (each node): CentOS Linux release 7.2.1511 (Core)
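To show how this environment might map onto a packstack answer file, here is a minimal sketch of the relevant overrides. The host addresses and the eth1 interface come from the list above. The physical network name `physnet1`, the bridge name `br-eth1`, and the VLAN ID range `1000:2000` are assumptions chosen for illustration, not values taken from this setup, so adjust them to your own network.

```ini
# Fragment of a packstack answer file (all other keys left at generated defaults)

# Node roles, using the public IPs listed above
CONFIG_CONTROLLER_HOST=192.168.2.12
CONFIG_NETWORK_HOSTS=192.168.2.13
CONFIG_COMPUTE_HOSTS=192.168.2.14

# VLAN-based tenant networks through the ML2 plugin
CONFIG_NEUTRON_ML2_TYPE_DRIVERS=vlan
CONFIG_NEUTRON_ML2_TENANT_NETWORK_TYPES=vlan

# Assumed: physical network name and VLAN ID range
CONFIG_NEUTRON_ML2_VLAN_RANGES=physnet1:1000:2000

# Assumed: bridge name br-eth1, attached to the internal interface eth1
CONFIG_NEUTRON_OVS_BRIDGE_MAPPINGS=physnet1:br-eth1
CONFIG_NEUTRON_OVS_BRIDGE_IFACES=br-eth1:eth1
```

A file like this is typically produced with `packstack --gen-answer-file=<file>`, edited, and then applied with `packstack --answer-file=<file>`.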
